AI Infrastructure Investment: The $200 Billion Data Center Boom

The global race to build AI infrastructure has ignited an unprecedented construction boom, with tech giants investing over $200 billion annually in data centers, specialized chips, and energy systems. From OpenAI's $500 billion Stargate initiative to Microsoft's next-generation facilities in Wisconsin, this infrastructure buildout will shape the AI era—but faces critical challenges around power, sustainability, and whether massive spending will generate proportional returns.

The artificial intelligence revolution isn't happening in the cloud—it's happening in massive physical structures consuming as much electricity as small cities, filled with specialized chips worth millions of dollars each, cooled by systems pumping thousands of gallons of water per minute. As AI capabilities accelerate from impressive to indispensable, the infrastructure supporting these systems has become the battleground determining which companies, countries, and technologies will dominate the next decade of innovation.

November 2025 marks a critical inflection point in this infrastructure race. Tech giants are collectively spending over $200 billion annually constructing AI-optimized data centers across the United States and globally. Microsoft announced plans for an $80 billion investment in AI-enabled facilities. Amazon allocated $125 billion for 2025 capital expenditures, primarily targeting AI infrastructure. Google raised its spending forecast to $91-93 billion. Meta committed $70-72 billion for 2025 alone, with promises of even higher spending in 2026. These figures represent the largest infrastructure buildout in technology history, dwarfing previous investments in cloud computing, telecommunications, or internet backbone development.
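As a rough cross-check on the headline number, the four company figures quoted above can simply be added. This is a sketch rather than a reconciled total: it carries the Google and Meta ranges through as given, and it ignores that the announcements cover different fiscal periods and are not exclusively AI spending. The variable and function names are illustrative.

```python
# Announced 2025 capital spending quoted in this article, in billions of USD.
# Ranges are stored as (low, high); single figures repeat the same value.
announced_capex = {
    "Microsoft": (80, 80),
    "Amazon": (125, 125),
    "Google": (91, 93),
    "Meta": (70, 72),
}

def total_range(capex):
    """Return (low, high) totals across all companies."""
    low = sum(lo for lo, _ in capex.values())
    high = sum(hi for _, hi in capex.values())
    return low, high

low_total, high_total = total_range(announced_capex)
print(f"Combined announced capex: ${low_total}-{high_total} billion")
```

On these inputs the four companies alone total $366-370 billion, so "over $200 billion" reads as a conservative floor rather than a precise estimate.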

The physical infrastructure powering AI: Data centers house millions of specialized processors in climate-controlled facilities

The scale becomes even more dramatic when considering specific projects. OpenAI's Stargate initiative, a partnership with Oracle and SoftBank, involves a $500 billion commitment to build 10 gigawatts of AI computing capacity across multiple U.S. sites over several years. The first facility in Abilene, Texas is already operational, with additional locations planned elsewhere in Texas and in New Mexico, Ohio, Michigan, and Wisconsin. Microsoft's Fairwater data center in Wisconsin spans 1.2 million square feet and, according to Microsoft, will deliver ten times the performance of today's fastest supercomputers. Amazon's Project Rainier houses 500,000 custom Trainium AI chips in a single facility, representing an $11 billion investment supporting partnerships with AI companies like Anthropic.

This article examines the forces driving the largest infrastructure investment in technology history: what's being built, who's building it, what challenges threaten timelines and returns, and whether this boom represents visionary preparation for AI's transformative potential or a speculative bubble that will leave billions in stranded assets.

The Scale of Investment: Breaking Down the $200 Billion

Understanding the AI infrastructure boom requires examining not just the dollar amounts but where the money flows and what it purchases. According to McKinsey analysis, global capital spending on AI-related infrastructure could reach $5.2 trillion between 2025 and 2030, with approximately $400 billion in 2025 alone. This spending primarily targets five interconnected categories:

Data Center Construction and Expansion: The most visible component involves building and expanding physical facilities. Unlike traditional data centers optimized for general computing, AI-specific facilities require fundamentally different designs. AI workloads generate vastly more heat: racks used to train large models such as those behind ChatGPT can draw over 80 kilowatts each, compared to 10-15 kilowatts for conventional server racks. Racks built around NVIDIA's latest GB200 chips may require up to 120 kilowatts, necessitating advanced liquid cooling systems that are three times more efficient than air cooling but significantly more expensive to install and maintain.

Current construction spending on data centers approached $30 billion in 2024, accounting for 21% of total data center capital expenditures. This figure is accelerating rapidly in 2025 as multiple gigawatt-scale projects begin construction. Microsoft's Fairwater facility alone represents a $7 billion commitment. OpenAI's Stargate sites collectively involve investments exceeding $400 billion over several years. The construction timeline for these facilities typically spans 18-36 months, creating a lag between announced investments and operational capacity.

Geographical distribution reflects strategic considerations around power availability, land costs, connectivity, and regulatory environment. Northern Virginia remains the densest data center hub, but growth is spreading to Texas (abundant power, favorable regulations), Wisconsin (renewable energy potential, political support), Ohio (economic incentives), and New Mexico (space, energy resources). Internationally, China's "New Infrastructure" plan involves approximately $100 billion in AI-related investments, while Europe is mobilizing €200 billion for AI infrastructure to reduce dependency on U.S. providers.

Specialized AI Chips and Hardware: AI infrastructure demands processors fundamentally different from traditional CPUs. Graphics processing units (GPUs) dominate, accounting for 82% of AI chip revenue in 2024. NVIDIA remains the market leader, with its Blackwell and Hopper architectures powering most large-scale AI training. The company shipped millions of GPUs in 2025, with hyperscalers like AWS, Microsoft, and Meta placing orders worth billions of dollars.

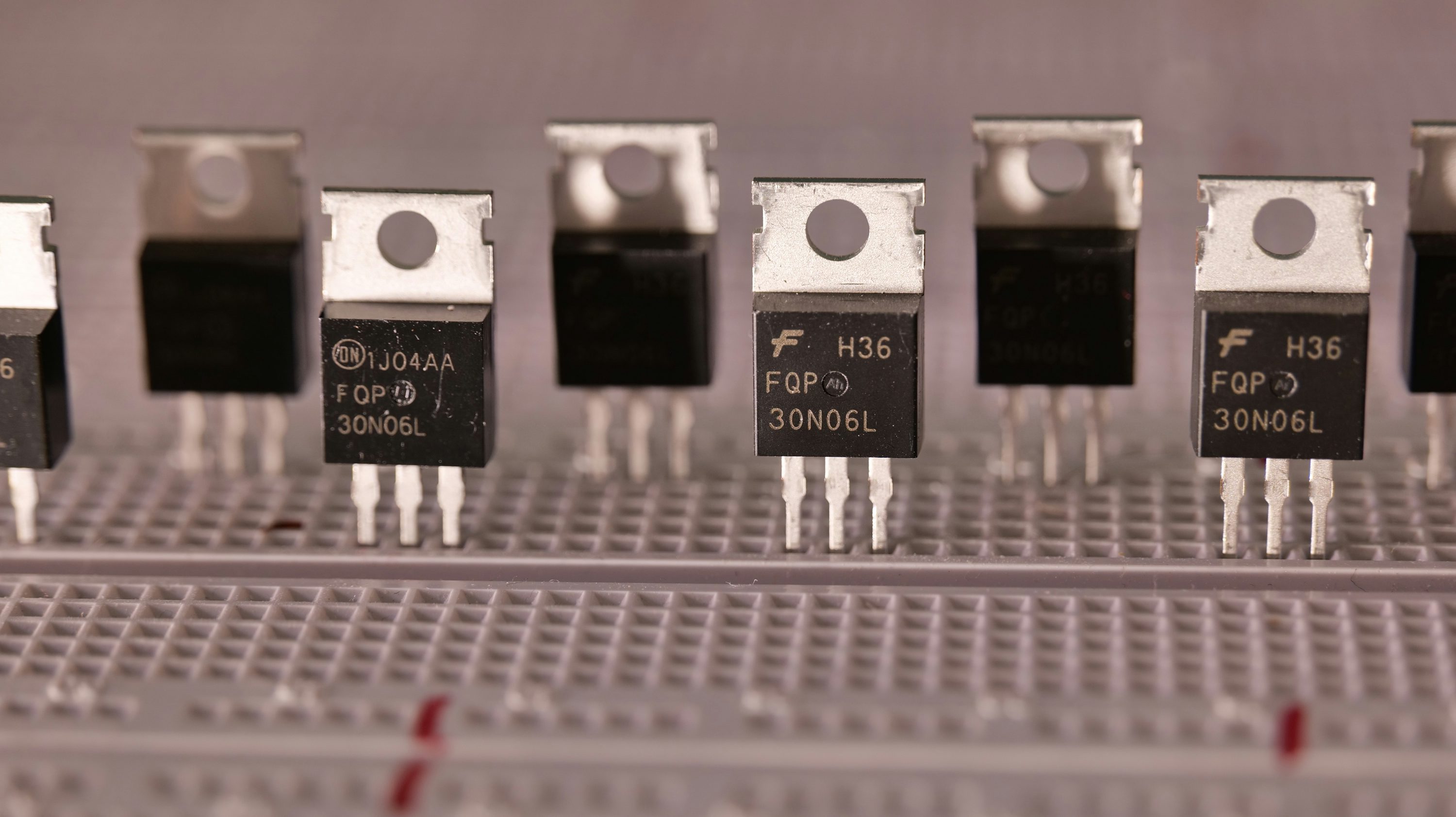

Specialized AI chips: The semiconductors powering artificial intelligence require cutting-edge manufacturing and design

However, the AI chip landscape is diversifying. AMD's MI350 series is gaining traction, with Oracle ordering up to 130,000 MI355X GPUs. Qualcomm entered the market with AI200 and AI250 chips targeting inference workloads and aiming to reduce operational costs compared to training-focused GPUs. Meanwhile, hyperscalers are designing custom silicon: Google's Tensor Processing Units (TPUs), Amazon's Trainium and Inferentia chips, and Microsoft's partnerships with AMD and others reflect strategies to reduce dependency on NVIDIA while optimizing for specific workloads.

The economics are staggering. High-end AI GPUs cost $30,000-50,000 per unit, with data centers housing hundreds of thousands. A single facility might contain $5-10 billion in chip hardware alone. Supply chains are strained, with advanced packaging capacity from TSMC and high-bandwidth memory from Samsung and SK Hynix becoming bottlenecks. These constraints have delayed deployments and given companies with secured supply substantial competitive advantages.
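The per-facility chip figure follows from simple multiplication, sketched below. The unit prices are the ones quoted above; the GPU counts of 100,000 and 200,000 are assumed stand-ins for "hundreds of thousands", and the function name is illustrative.

```python
def chip_spend_billions(gpu_count, unit_price_usd):
    """Total accelerator spend in billions of USD."""
    return gpu_count * unit_price_usd / 1e9

# Unit prices come from the article; the GPU counts are ASSUMED values
# standing in for "hundreds of thousands".
for gpu_count in (100_000, 200_000):
    for unit_price in (30_000, 50_000):
        spend = chip_spend_billions(gpu_count, unit_price)
        print(f"{gpu_count:,} GPUs at ${unit_price:,}: ${spend:.1f}B")
```

Under these assumptions the spend ranges from $3 billion to $10 billion, which brackets the $5-10 billion estimate for a single large facility.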

Power and Energy Infrastructure: AI's energy demands represent perhaps the most significant infrastructure challenge. Training models requires massive continuous power—30 megawatts or more for large facilities. The International Energy Agency reports that U.S. data centers consumed 183 terawatt-hours in 2024, with projections reaching 426 terawatt-hours by 2030 as AI workloads multiply. Globally, AI data centers could account for 3.4% of CO₂ emissions in 2025, raising profound sustainability concerns.
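To put the consumption projection in annual terms, the two figures above can be converted to an implied compound growth rate. This is a minimal sketch that assumes smooth year-over-year compounding between 2024 and 2030, which the underlying projection may not follow; the function name is illustrative.

```python
def implied_annual_growth(start_value, end_value, years):
    """Compound annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures quoted above: 183 TWh in 2024, 426 TWh projected for 2030.
rate = implied_annual_growth(183, 426, 2030 - 2024)
print(f"Implied growth: {rate:.1%} per year")
```

The result is roughly 15% per year, sustained for six years.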

Power availability is now the primary constraint on data center location decisions. Utilities are struggling to meet demand, with grid interconnection queues stretching years and transmission infrastructure requiring lengthy approval processes. This has driven tech companies into direct energy investments. Microsoft is exploring partnerships with nuclear power providers for carbon-free baseload electricity. Amazon has invested in renewable energy projects totaling gigawatts of capacity. Meta and Google are pursuing similar strategies, recognizing that power availability will determine competitive position as much as chip supply or engineering talent.

The energy costs are substantial and ongoing. At current electricity rates, powering a large AI facility costs millions of dollars monthly. Studies project that data center growth could increase average U.S. electricity bills by 8% by 2030, potentially creating political resistance to further expansion in some regions. Environmental groups are raising concerns about fossil fuel use and water consumption, with U.S. data centers using 17 billion gallons of water in 2023, potentially rising to 33 billion by 2028 as cooling demands increase.
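The "millions of dollars monthly" estimate can be reproduced with a back-of-envelope calculation. The sketch below assumes a flat electricity price of $0.07 per kWh and a constant load, neither of which comes from the article; real contracts include demand charges and variable pricing.

```python
HOURS_PER_MONTH = 730  # average: 8,760 hours per year / 12

def monthly_power_cost_usd(load_mw, price_per_kwh, utilization=1.0):
    """Monthly electricity cost for a facility drawing load_mw continuously."""
    energy_kwh = load_mw * 1_000 * HOURS_PER_MONTH * utilization
    return energy_kwh * price_per_kwh

# ASSUMPTION: $0.07/kWh is an illustrative industrial rate, not from the article.
for load_mw in (30, 1_000):
    cost = monthly_power_cost_usd(load_mw, price_per_kwh=0.07)
    print(f"{load_mw:>5} MW: ${cost / 1e6:,.1f}M per month")
```

At the assumed rate, a 30-megawatt facility costs about $1.5 million per month to power, and a gigawatt-scale site about $51 million.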

Networking and Connectivity: AI workloads require high-speed connections both within data centers (between servers for distributed training) and between data centers (for data transfer and model deployment). This has driven investments in networking equipment, with Ethernet switches and routers representing a growing share of capital spending. Advanced networking technologies like InfiniBand and high-speed optical interconnects are becoming standard in AI facilities, adding hundreds of millions to costs for large deployments.

Talent and Operational Infrastructure: Building and operating AI infrastructure requires specialized expertise. Top AI researchers command compensation packages exceeding $1 million annually. Data center engineers, electrical specialists, and operations teams represent significant ongoing costs. Meta increased headcount by 8% year-over-year to 78,450 employees despite recent layoffs, with much of the growth in AI talent. Microsoft, Amazon, and Google are similarly expanding AI-focused teams, creating fierce competition for limited talent pools.

The physical buildout: Massive construction projects are transforming the data center landscape across the United States

Who's Building What: The Major Players and Projects

The AI infrastructure boom involves a complex ecosystem of builders, from established tech giants to specialized data center operators, energy companies, and semiconductor manufacturers. Understanding the major players and their strategies reveals different approaches to capturing AI infrastructure value.

Hyperscaler-Led Investments: Amazon, Microsoft, and Google—the "Big Three" cloud providers—are leading infrastructure spending. Amazon's $125 billion in 2025 capital expenditures primarily funds AWS data center expansion, adding 3.8 gigawatts of computing capacity over the past 12 months with plans for another gigawatt in Q4 and doubling capacity by 2027. AWS maintains market leadership with a $132 billion annualized revenue run rate, and management describes AI as the "single largest driver of new cloud workloads."

Microsoft's approach emphasizes custom AI partnerships. The company's $80 billion investment in AI-enabled data centers includes specific commitments to OpenAI, providing guaranteed compute capacity for model development. Microsoft's Fairwater facility in Wisconsin showcases next-generation design with closed-loop liquid cooling systems reducing water waste while enabling high-density GPU deployments. The company plans to increase AI capacity by over 80% in 2025 and double its data center footprint over the next two years, though ongoing capacity constraints are limiting Azure's ability to fully meet demand.

Google's strategy leverages proprietary hardware and software integration. The company's TPUs provide competitive advantages in efficiency and cost for certain workloads. Google Cloud revenue grew 35% year-over-year in Q3 2025, reaching $15.15 billion, with a backlog of $155 billion (up 46% quarter-over-quarter) indicating sustained enterprise demand. Google raised its 2025 capital expenditure forecast to $91-93 billion, the third increase this year, prioritizing AI infrastructure to capture growing market share from AWS and Azure.

OpenAI's Stargate Initiative: Perhaps the most ambitious single project, Stargate represents a partnership between OpenAI, Oracle, and SoftBank to build 10 gigawatts of AI computing capacity—more than the current total capacity of many cloud providers. The first site in Abilene, Texas is operational, with additional facilities planned for Shackelford County (Texas), Doña Ana County (New Mexico), Lordstown (Ohio), Saline Township (Michigan), and Wisconsin locations.

The $500 billion investment over multiple years involves not just data centers but entire supporting ecosystems: dedicated power infrastructure, cooling systems, networking, and long-term chip supply agreements with NVIDIA. The project is expected to create over 25,000 jobs across construction and operations. Oracle's involvement brings cloud infrastructure expertise, while SoftBank contributes energy and telecommunications capabilities. The scale suggests OpenAI's confidence in sustained AI model development requiring ever-larger training runs, though critics question whether demand will materialize to justify such enormous fixed costs.

Meta's Long-Term AI Bets: Meta's $70-72 billion in 2025 capital expenditures, with promises of higher spending in 2026, reflects CEO Mark Zuckerberg's willingness to invest aggressively despite market skepticism. The company is building data center capacity to support AI-powered advertising systems, Reels recommendations, and emerging products like Ray-Ban Meta smart glasses. Meta secured $27 billion in debt financing for infrastructure investments, indicating long-term commitment regardless of near-term profitability pressures.

Meta's approach differs from cloud providers—the infrastructure primarily serves internal applications rather than external customers. This concentrates risk, as returns depend entirely on Meta's ability to monetize AI within its advertising and social media businesses. However, it also provides strategic flexibility, allowing the company to optimize infrastructure specifically for its workloads without compromising for diverse customer needs.

Specialized Data Center Operators and Partnerships: Beyond hyperscalers, specialized companies are capturing infrastructure value. A consortium led by BlackRock, including NVIDIA and Microsoft, agreed to acquire Aligned Data Centers for $40 billion in October 2025, one of the largest data center deals on record. This transaction highlights the investment appeal of data center assets, with financial firms viewing AI infrastructure as a strategic asset class offering long-term returns.

Construction firms are also benefiting. Bechtel announced partnerships with NVIDIA to accelerate AI data center construction through modularization, reducing build times and improving reliability. This approach treats data centers as manufacturing products rather than custom construction projects, potentially addressing capacity constraints faster than traditional methods.

Semiconductor Supply Chain: The infrastructure boom extends beyond data centers to chip manufacturing. NVIDIA's market capitalization has ballooned to over $3 trillion, driven primarily by AI chip demand. However, the company faces supply constraints from TSMC's advanced packaging capacity and high-bandwidth memory shortages from Samsung and SK Hynix. Taiwan Semiconductor Manufacturing Company (TSMC) is investing tens of billions to expand capacity, but lead times for advanced nodes remain 12-18 months, creating bottlenecks that constrain infrastructure deployment regardless of capital availability.

Critical Challenges: Power, Sustainability, and Economic Risks

Despite the momentum, significant challenges threaten timelines, returns, and even the feasibility of planned expansions:

Power Grid Limitations: Electricity availability is emerging as the primary constraint. Training models like those behind ChatGPT requires continuous power equivalent to thousands of homes. Utilities face a daunting challenge: U.S. data center demand is projected to more than double from 35 gigawatts to 78 gigawatts by 2035, straining grids already stressed by electrification trends in transportation and heating. Approval processes for new transmission lines can take four years or longer, creating mismatches between data center construction timelines (18-36 months) and power infrastructure readiness.
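The timeline mismatch can be made concrete with a short calculation. The sketch below assumes construction and transmission approval start at the same time and takes "four years or longer" at its four-year minimum; both are simplifying assumptions, and the function name is illustrative.

```python
def power_wait_months(build_months, transmission_months):
    """Months a finished facility would wait for grid power (0 if none)."""
    return max(0, transmission_months - build_months)

TRANSMISSION_MONTHS = 4 * 12  # "four years or longer", taken at its minimum

for build_months in (18, 36):
    wait = power_wait_months(build_months, TRANSMISSION_MONTHS)
    print(f"Build {build_months} months -> waits {wait} months for power")
```

Under these assumptions a completed facility would sit idle for between 12 and 30 months, which helps explain why operators pursue on-site generation and direct energy deals.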

Regional disparities are emerging. Some areas, particularly in the Southeast and Texas, have relatively accommodating power utilities and regulatory environments. Others, including parts of California and the Northeast, face political and environmental resistance to major power infrastructure expansions. This is creating geographic clustering that may prove unsustainable if local resources become overwhelmed.

The political economy of power is also shifting. Local communities are questioning whether hosting energy-intensive data centers that employ relatively few permanent workers justifies impacts on electricity prices, environmental resources, and quality of life. Public opinion surveys show that 25% of Americans view AI's environmental impact negatively, citing energy use and resource depletion. Local opposition has emerged in Indiana, West Virginia, and other locations, sometimes blocking or delaying projects through zoning challenges and regulatory processes.

Sustainability and Environmental Concerns: AI infrastructure's environmental footprint is becoming a reputational and regulatory risk. Data centers are projected to account for 3.4% of global CO₂ emissions in 2025, a figure that could grow substantially if current expansion trends continue. Water consumption for cooling is another flashpoint—particularly in arid regions experiencing droughts, using billions of gallons annually for data centers raises ethical and practical questions about resource allocation.

Tech companies are responding with sustainability commitments. Microsoft pledges carbon-free energy matching for its data centers. Amazon is investing heavily in renewable energy projects. Google aims for 24/7 carbon-free energy at all facilities by 2030. However, the scale of demand is growing faster than renewable capacity can expand, forcing reliance on natural gas and other fossil fuels in the interim. Some facilities are exploring waterless cooling technologies and modular designs that reduce construction waste, but these solutions add costs and complexity.

Regulatory pressures are mounting. European Union rules reportedly include requirements for 40% heat reuse efficiency for large data centers, forcing design changes. Environmental groups are advocating for stricter regulations in the U.S., potentially leading to requirements that slow construction or increase costs. Companies failing to address sustainability concerns proactively risk regulatory crackdowns, reputational damage, and political resistance to further expansion.

Workforce Shortages and Supply Chain Disruptions: Building data centers at the current pace requires skilled labor—electricians, engineers, construction workers, specialized technicians—in quantities that exceed available supply. Deloitte surveys indicate that 72% of data center executives cite workforce shortages as extremely challenging, with high turnover and competition for talent delaying projects. Training new workers takes time, creating bottlenecks that capital alone can't solve.

Supply chains for critical components face similar strains. Tariffs on steel, solar modules, and other materials have increased costs and created uncertainty. Lead times for specialized equipment like high-voltage transformers, cooling systems, and networking gear have extended, sometimes by months. Semiconductor supply constraints, particularly for high-bandwidth memory and advanced packaging, remain acute despite capacity expansions. These bottlenecks mean that even companies with capital and power availability may face delays in achieving operational capacity.

Economic Risks and Stranded Assets: The most fundamental question is whether AI demand will justify the infrastructure being built. McKinsey estimates suggest that 60% of AI data center capital expenditures flow to technology developers like NVIDIA and Intel. If AI adoption disappoints, or if efficiency gains (such as those demonstrated by DeepSeek's reduced training costs) diminish hardware requirements, these massive investments could generate insufficient returns.

History offers cautionary lessons. The telecommunications industry overbuilt fiber optic networks during the late 1990s, leading to bankruptcies and stranded assets when demand didn't materialize on expected timelines. Even if AI ultimately transforms the economy as predicted, the timing and path matter enormously—capacity built too early or in wrong configurations may prove unprofitable.

The circular financing arrangements raise additional concerns. NVIDIA's planned investment of up to $100 billion in OpenAI, which in turn purchases NVIDIA chips, creates demand that may not reflect genuine market needs. Meta securing $27 billion in debt financing raises questions about whether spending reflects actual demand or speculative positioning. If AI revenues don't materialize quickly enough to service debt and justify continued investment, financial distress could cascade through the ecosystem.

Market concentration is another risk. If a few companies control the infrastructure, they could exercise pricing power that limits AI adoption or creates dependence dangerous for customers and the broader economy. Alternatively, competition could drive prices down, threatening returns on capital-intensive investments. The balance between profitability and market access will determine long-term industry structure.

The Stakes: What This Infrastructure Boom Means

The AI infrastructure boom has implications extending far beyond technology:

Economic Opportunity and Risk: The investments are creating jobs. OpenAI's Stargate alone is expected to generate over 25,000 positions, and broader construction and operations employment runs to the tens of thousands. Spending supports industries from construction to networking equipment to semiconductor manufacturing. However, if the infrastructure proves overbuilt or AI monetization disappoints, economic losses could be substantial. The Bank of England and International Monetary Fund have warned that AI valuations have reached "dot-com peak levels," increasing risks of sharp corrections that would impact retirement accounts, pension funds, and economic confidence.

Geopolitical Competition: Infrastructure capacity is becoming a measure of national competitiveness. The United States currently hosts 51% of global hyperscale data centers, maintaining technological leadership. China's investments aim to reduce dependence on Western technology and infrastructure. Europe is attempting to build sovereign AI capacity to avoid reliance on U.S. or Chinese providers. The infrastructure buildout occurring now will determine which countries and companies lead AI development for decades, with profound implications for economic and military power.

Innovation Access and Equity: The capital requirements for competitive AI infrastructure—$70-125 billion annually—create barriers favoring large incumbents. Startups and smaller companies depend on cloud access, potentially concentrating AI capabilities and limiting innovation. However, historical precedents like AWS enabling thousands of startups suggest that dominant infrastructure providers often enable broader ecosystems. Whether today's investments will democratize AI access or entrench existing power structures remains uncertain.

Climate and Sustainability: AI infrastructure's environmental impact will shape public opinion and political support for further AI development. If the industry successfully transitions to sustainable energy and water-efficient cooling, AI can scale with manageable environmental costs. If not, backlash could limit growth and create regulatory barriers that stifle innovation. The decisions being made now about power sources, cooling technologies, and facility design will determine AI's environmental legacy.

Conclusion: Building the Foundation or Inflating a Bubble?

The AI infrastructure boom represents the largest technology investment in history—a $200+ billion annual commitment to building the physical foundation for artificial intelligence. Tech giants are constructing gigawatt-scale data centers, ordering millions of specialized chips, securing massive power allocations, and hiring thousands of engineers in a race to capture AI's transformative potential.

The optimistic scenario sees this infrastructure enabling breakthrough applications that justify current spending many times over—AI-powered drug discovery, climate solutions, educational transformation, and productivity gains across every industry. The infrastructure being built today becomes the backbone of decades of innovation, much as internet infrastructure from the 1990s-2000s enabled the digital economy.

The pessimistic scenario sees overinvestment outpacing actual demand, with companies burning capital on speculative bets that fail to monetize quickly enough. Circular financing arrangements collapse when AI revenues disappoint. Power grid limitations and environmental resistance slow deployments. Markets correct sharply as investors reassess AI valuations, leading to write-downs, project cancellations, and stranded assets.

The most likely outcome probably lies between these extremes. AI will deliver transformative value in specific domains, justifying substantial infrastructure investment—but current spending may overshoot near-term demand, leading to a period of disappointing returns before eventually validating long-term theses. This would mirror other major technological transitions like cloud computing, which took years to fully monetize after massive initial investments.

What's certain is that the decisions being made now—where to build, how much to spend, what technologies to deploy—will shape the AI era's winners and losers. Companies, countries, and investors who navigate the boom wisely will capture enormous value. Those who misjudge the timing, scale, or direction risk billions in losses. The AI infrastructure boom is simultaneously the foundation of the future and one of the greatest speculative bets in technology history. November 2025 marks a critical moment in this unfolding story—the point when promises must begin delivering results.

- The Economist. (2025, September 30). The murky economics of the data-centre investment boom. https://www.economist.com/business/2025/09/30/the-murky-economics-of-the-data-centre-investment-boom
- Reuters. (2025, October 15). BlackRock, Nvidia buy Aligned Data Centers in $40 billion deal. https://www.reuters.com/legal/transactional/blackrock-nvidia-buy-aligned-data-centers-40-billion-deal-2025-10-15/
- OpenAI. (2025, September 23). Five new Stargate sites announced. https://openai.com/index/five-new-stargate-sites/
- Business Insider. (2025, October). Big Tech could spend $320 billion on AI infrastructure in 2025. https://www.businessinsider.com/big-tech-ai-capex-infrastructure-data-center-wars-2025-10
- Microsoft Blog. (2025, September 18). Inside the world's most powerful AI datacenter. https://blogs.microsoft.com/blog/2025/09/18/inside-the-worlds-most-powerful-ai-datacenter/
- CNBC. (2025, September 23). OpenAI's first data center in $500 billion Stargate project up in Texas. https://www.cnbc.com/2025/09/23/openai-first-data-center-in-500-billion-stargate-project-up-in-texas.html
- The Network Installers. (2025). AI data center statistics and trends. https://thenetworkinstallers.com/blog/ai-data-center-statistics/
- Pew Research Center. (2025, October 24). What we know about energy use at US data centers amid the AI boom. https://www.pewresearch.org/short-reads/2025/10/24/what-we-know-about-energy-use-at-us-data-centers-amid-the-ai-boom/
- NPR. (2025, October 14). Google, AI, data centers: Growth vs. environment and electricity. https://www.npr.org/2025/10/14/nx-s1-5565147/google-ai-data-centers-growth-environment-electricity
- IDTechEx. (2025). Scaling the silicon: Why GPUs are leading the AI data center boom. https://www.idtechex.com/en/research-article/scaling-the-silicon-why-gpus-are-leading-the-ai-data-center-boom/33638
- Deloitte. (2025). Data center infrastructure and artificial intelligence challenges. https://www.deloitte.com/us/en/insights/industry/power-and-utilities/data-center-infrastructure-artificial-intelligence.html

Disclaimer: This analysis is for informational purposes only and does not constitute investment advice. Markets and competitive dynamics can change rapidly in the technology sector. Taggart is not a licensed financial advisor and does not claim to provide professional financial guidance. Readers should conduct their own research and consult with qualified financial professionals before making investment decisions.

Taggart Buie

Writer, Analyst, and Researcher