AMD's AI Ambitions: A Serious Challenge to NVIDIA?

AMD has been steadily building its AI chip portfolio. This deep dive examines AMD's MI300 series, software ecosystem development, and strategic partnerships to determine whether the company can truly compete with NVIDIA's CUDA-backed dominance.

For years, NVIDIA has dominated the artificial intelligence chip market with a near-monopoly that seemed unbreakable. But one competitor has consistently invested, iterated, and improved its AI offerings with the resources and determination to pose a credible challenge: Advanced Micro Devices, better known as AMD. As we move through 2025, AMD's AI ambitions have reached a critical inflection point. The company's MI300 series accelerators are gaining traction, its software ecosystem is maturing, and major customers are giving AMD's technology serious consideration. The question is no longer whether AMD can compete in AI—it clearly can—but rather how much market share it can capture and whether it can truly challenge NVIDIA's dominance.

AMD's journey in AI acceleration has been marked by persistence in the face of skepticism. While NVIDIA built its empire on GPUs that evolved from gaming graphics to AI workhorses, AMD had to deliberately pivot its focus to AI, develop specialized hardware, and convince customers to bet on an alternative to the established leader. The company's recent progress suggests this long-term strategy may finally be paying dividends.

The Foundation: AMD's AI Heritage and Evolution

AMD's path to becoming a serious AI chip contender wasn't straightforward. The company has long been NVIDIA's primary competitor in graphics processors for gaming, but for years, AMD's GPUs lagged behind NVIDIA's offerings for AI and machine learning workloads. NVIDIA's early investment in CUDA—its proprietary parallel computing platform—gave it an enormous head start that AMD struggled to overcome.

The turning point came when AMD began building dedicated data center accelerators instead of relying solely on its consumer graphics lineup. The Radeon Instinct line, announced in late 2016, was AMD's first product family aimed squarely at machine learning, although its early cards still used GPU architectures shared with gaming products. Those early generations received mixed reviews: they offered competitive raw performance in some scenarios but suffered from immature software support and limited ecosystem integration.

AMD's acquisition of Xilinx, announced in 2020 as a $35 billion all-stock deal and completed in February 2022, proved transformative for its AI strategy. Xilinx brought not just technology in field-programmable gate arrays (FPGAs) and adaptive computing, but also critical expertise in data center systems, AI inference workloads, and relationships with hyperscale cloud providers. This acquisition gave AMD capabilities that complemented its GPU heritage and positioned it to offer more comprehensive AI solutions.

The company's MI200 series, released in late 2021, represented a significant step forward. These chips used AMD's CDNA 2 architecture, purpose-built for AI and high-performance computing rather than adapted from gaming. They offered impressive performance for certain AI workloads and found adoption in supercomputers, most prominently Oak Ridge National Laboratory's Frontier, and at research institutions. However, the MI200 series still faced the fundamental challenge every NVIDIA competitor faces: the software ecosystem gap.

The MI300 Series: AMD's Flagship AI Challenge

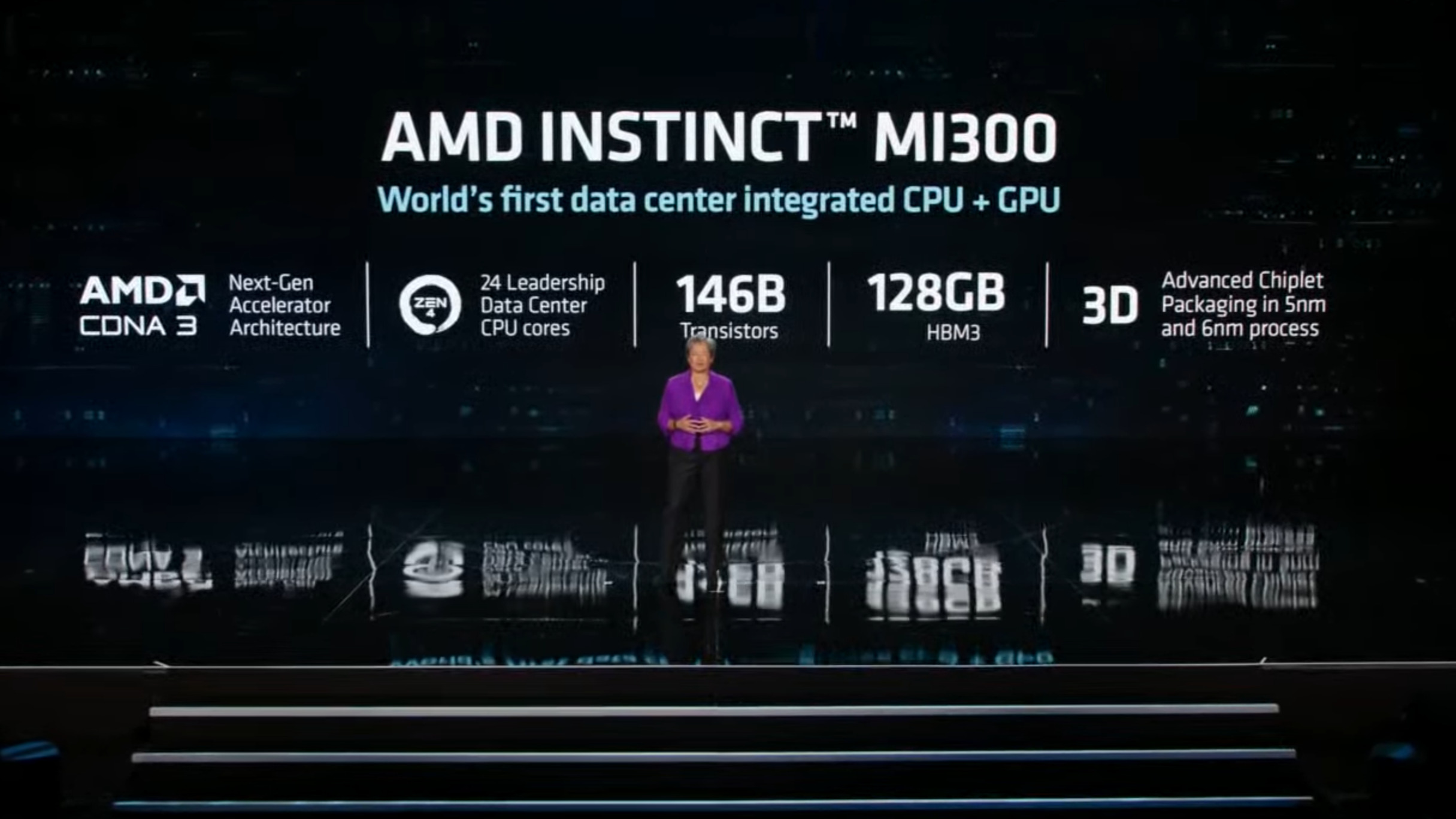

AMD's MI300 series, particularly the MI300X announced in 2023 and ramping production through 2024-2025, represents the company's most ambitious and capable AI accelerator to date. This isn't an incremental improvement—it's a ground-up redesign incorporating lessons from previous generations and leveraging AMD's full technology portfolio.

The MI300X's architecture is innovative in several respects. It uses a chiplet design, combining multiple smaller dies on a single package, which provides manufacturing advantages and lets AMD mix different types of silicon: eight GPU compute dies are stacked on four I/O dies and surrounded by eight stacks of high-bandwidth memory (HBM3). Placing memory on the same package as the compute dies shortens the path data travels, delivering far more bandwidth at lower energy per bit than off-package memory could.

The specifications are genuinely impressive. The MI300X features 192GB of HBM3 memory—significantly more than NVIDIA's H100 (80GB) or even the H200 (141GB). This memory advantage matters enormously for large language models and other AI applications where model size is constrained by available memory. AMD can fit larger models on a single accelerator or process larger batches, potentially providing throughput advantages.
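A rough way to see why capacity matters: model weights alone need about (parameter count × bytes per parameter) of memory. The sketch below applies that rule of thumb to a hypothetical 70-billion-parameter model using the capacities quoted above, treating GB as 10^9 bytes for simplicity. The helper names are illustrative, and the sketch deliberately ignores the KV cache, activations, and runtime overhead, all of which need additional room in practice.

```python
# Memory capacities quoted in the text, in GB (10**9 bytes) for simplicity.
CAPACITY_GB = {"MI300X": 192, "H200": 141, "H100": 80}

# Bytes needed to store one parameter at common inference precisions.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weight_footprint_gb(params_billion: float, precision: str) -> float:
    """Approximate memory for the model weights alone, in GB."""
    # (1e9 params * bytes/param) / (1e9 bytes/GB) reduces to a simple product.
    return params_billion * BYTES_PER_PARAM[precision]


def headroom_gb(params_billion: float, precision: str, chip: str) -> float:
    """Memory left on one accelerator after loading the weights.

    A negative result means the weights do not fit on a single device.
    """
    return CAPACITY_GB[chip] - weight_footprint_gb(params_billion, precision)


if __name__ == "__main__":
    # Hypothetical 70B-parameter model served in FP16.
    for chip in CAPACITY_GB:
        print(chip, headroom_gb(70, "fp16", chip))
```

For a 70B model in FP16 (about 140 GB of weights), this leaves roughly 52 GB on an MI300X, about 1 GB on an H200 (too little for a useful KV cache), and a 60 GB shortfall on an H100, which forces the model across at least two H100s.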

The MI300X also delivers substantial computational power, with AMD claiming leadership in several AI benchmark workloads. AMD rates the chip at about 1.3 petaflops of peak dense FP16 compute, competitive on paper with NVIDIA's best offerings, although delivered performance depends heavily on software maturity. Power efficiency, measured as performance per watt, is also competitive, which is crucial for data centers where electricity and cooling costs significantly affect total cost of ownership.

Beyond raw specifications, AMD has pitched the MI300X squarely at transformer models, the architecture behind ChatGPT and most modern large language models. Transformer training and inference are dominated by large matrix multiplications and by streaming model weights and cached attention state from memory, so the chip's combination of matrix throughput, memory capacity, and memory bandwidth lines up well with their computational patterns, even though the MI300X remains a general-purpose GPU rather than a transformer-only design.

The MI300A variant takes a different approach, integrating 24 of AMD's Zen 4 CPU cores with the same CDNA 3 GPU architecture in a single package, where the CPU and GPU share one pool of HBM. This unified design targets workloads that need both CPU and GPU compute, since data no longer has to be copied between separate memories. While the MI300X has garnered more attention for AI, the MI300A has found its main role in high-performance computing: it powers Lawrence Livermore National Laboratory's El Capitan supercomputer and suits scientific workloads that mix simulation with AI.

The Software Ecosystem: Closing the CUDA Gap

Hardware performance alone doesn't win in AI acceleration; the software ecosystem proves equally critical. NVIDIA's CUDA platform represents perhaps its strongest competitive moat, with thousands of optimized libraries, frameworks, and tools built over nearly two decades. CUDA is the default target for AI research code, and every major AI framework optimizes for it first. This ecosystem advantage has doomed many technically capable NVIDIA competitors who couldn't offer comparable software experiences.

AMD understands this challenge and has invested substantially in its ROCm (Radeon Open Compute) platform, its answer to CUDA. ROCm has evolved from a rough, barely documented tool for enthusiasts into an increasingly mature platform that supports major AI frameworks and offers performance approaching CUDA's in many scenarios.

ROCm's approach differs philosophically from CUDA. While NVIDIA's platform is proprietary and tightly controlled, AMD has open-sourced much of ROCm. Its primary programming model, HIP, is a C++ dialect that closely mirrors CUDA, and the stack also supports open standards such as OpenCL and OpenMP offloading, while third-party SYCL implementations can target AMD GPUs. This openness appeals to customers who want to avoid vendor lock-in, though it also means AMD exercises less control over the developer experience.

Recent ROCm releases have made significant progress on the usability and performance front. The platform now provides relatively seamless support for PyTorch, TensorFlow, and other popular AI frameworks. AMD has worked directly with framework developers and major AI companies to optimize popular models for MI300 series hardware. When Microsoft, Meta, or Oracle deploy AMD accelerators, they're not left to figure out optimization alone—AMD's software teams actively collaborate on getting workloads running efficiently.

AMD has also built migration tools to help developers port CUDA code to ROCm. Its HIPIFY tools (hipify-clang and hipify-perl) automatically translate much CUDA source code into HIP, which can then be compiled for AMD hardware. Not all code translates cleanly, and some manual optimization remains necessary, but these tools lower the barrier to adopting AMD accelerators, particularly for organizations with existing CUDA codebases.
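To illustrate the idea behind source-to-source translation (not HIPIFY's actual implementation), the sketch below rewrites a handful of CUDA runtime identifiers to their HIP equivalents with a regular expression. The mapping table is a tiny hand-picked subset and `toy_hipify` is a hypothetical helper; the real hipify-clang tool parses code with Clang, and hipify-perl applies a much larger set of pattern rules.

```python
import re

# A few CUDA runtime identifiers and their HIP counterparts. Real HIPIFY
# tools cover far more of the API; this table is an illustrative subset.
CUDA_TO_HIP = {
    "cuda_runtime.h": "hip/hip_runtime.h",
    "cudaMalloc": "hipMalloc",
    "cudaMemcpy": "hipMemcpy",
    "cudaMemcpyHostToDevice": "hipMemcpyHostToDevice",
    "cudaMemcpyDeviceToHost": "hipMemcpyDeviceToHost",
    "cudaDeviceSynchronize": "hipDeviceSynchronize",
    "cudaFree": "hipFree",
}

# Longest names are tried first, and \b word boundaries ensure only whole
# identifiers match, so cudaMemcpy never fires inside cudaMemcpyHostToDevice
# and unrelated names such as mycudaMalloc are left alone.
_PATTERN = re.compile(
    r"\b("
    + "|".join(re.escape(k) for k in sorted(CUDA_TO_HIP, key=len, reverse=True))
    + r")\b"
)


def toy_hipify(source: str) -> str:
    """Rewrite known CUDA runtime identifiers to HIP (illustration only)."""
    return _PATTERN.sub(lambda m: CUDA_TO_HIP[m.group(1)], source)


if __name__ == "__main__":
    cuda_src = (
        "#include <cuda_runtime.h>\n"
        "cudaMalloc(&d_x, bytes);\n"
        "cudaMemcpy(d_x, h_x, bytes, cudaMemcpyHostToDevice);\n"
        "cudaFree(d_x);\n"
    )
    print(toy_hipify(cuda_src))
```

Kernel code and `<<<...>>>` launch syntax pass through unchanged here. The hard part of a real port is everything a lookup table cannot capture: library calls without one-to-one equivalents, warp-size assumptions, and hand-tuned kernels that need re-optimization for AMD hardware.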

The ecosystem gap hasn't closed entirely. CUDA still offers more libraries, better documentation, more community support, and deeper optimization for the latest models. An AI startup building on NVIDIA can find extensive tutorials, community forums, and readily available expertise. The same startup choosing AMD faces more friction—fewer resources, less community knowledge, and potentially more troubleshooting. For greenfield AI projects, NVIDIA remains the path of least resistance.

However, the gap has narrowed sufficiently that for major organizations with dedicated AI teams, AMD has become viable. When Meta, Microsoft, or large enterprises evaluate AMD accelerators, they have the resources to work through software challenges and optimize specifically for AMD hardware. The economic incentives—better pricing, more memory, reduced dependence on a single supplier—increasingly justify the additional software effort.

Strategic Partnerships: Building Market Presence

AMD has pursued an aggressive partnership strategy to establish market presence for its AI accelerators. Rather than trying to win customers one by one through direct sales alone, AMD has cultivated relationships with the most influential adopters—cloud hyperscalers and AI giants whose choices influence the broader market.

Microsoft has emerged as perhaps AMD's most important AI accelerator partner. Microsoft Azure offers AMD MI300X-based virtual machine instances, giving developers access to AMD accelerators through the cloud. More significantly, Microsoft is reportedly using AMD chips for some of its own AI workloads, including aspects of Bing AI and other services. Having Microsoft's endorsement provides enormous credibility and demonstrates that AMD's technology can handle real-world, production AI workloads at massive scale.

Meta (Facebook) has also announced significant AMD adoption. The company purchased substantial quantities of MI300X accelerators for AI training and inference workloads. Meta's willingness to diversify away from NVIDIA—driven by supply constraints, desire for negotiating leverage, and technical evaluation of AMD's capabilities—represents exactly the kind of customer win AMD needs. Meta's engineers have worked with AMD on software optimization and publicly discussed their experiences, providing valuable proof points for other potential customers.

Oracle Cloud Infrastructure has integrated AMD Instinct accelerators into its cloud offerings. Oracle's positioning as an enterprise-focused cloud provider creates opportunities for AMD among enterprise customers who may be underserved while NVIDIA allocates much of its constrained supply to the largest hyperscalers.

Importantly, AMD has avoided the trap of offering its accelerators solely through its own channels. By working with cloud providers and OEMs (original equipment manufacturers) like Dell, HPE, and Supermicro, AMD ensures that customers can acquire MI300-based systems through established procurement relationships. This channel strategy helps AMD reach customers who might not engage directly with semiconductor vendors.

The partnership strategy extends beyond customers to include collaboration with AI software companies. AMD works with companies developing AI frameworks, tools, and applications to ensure their offerings run well on AMD hardware. These partnerships help build the broader ecosystem that makes AMD accelerators viable for more use cases.

Pricing and Economics: The Value Proposition

One of AMD's strongest competitive weapons is pricing and value proposition. While exact pricing varies based on volume commitments and specific configurations, AMD generally prices its AI accelerators 15-30% below comparable NVIDIA offerings. Given that leading AI accelerators cost $25,000-40,000 per chip, these discounts can amount to substantial savings when purchasing thousands of chips for data center deployments.
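To make the percentage concrete, the arithmetic below applies the 15-30% discount range cited above to a hypothetical purchase. The fleet size and the $30,000 reference price are illustrative assumptions within the article's $25,000-40,000 range, not quoted prices, and `fleet_savings_range` is a hypothetical helper.

```python
def fleet_savings_range(num_chips: int, nvidia_price: int,
                        discount_low_pct: int = 15,
                        discount_high_pct: int = 30) -> tuple[int, int]:
    """Dollar savings if AMD undercuts a comparable NVIDIA part.

    Integer percentages keep the arithmetic exact. Returns (low, high).
    """
    list_total = num_chips * nvidia_price
    return (list_total * discount_low_pct // 100,
            list_total * discount_high_pct // 100)


if __name__ == "__main__":
    # Hypothetical 1,000-chip order at an assumed $30,000 per comparable NVIDIA part.
    low, high = fleet_savings_range(1_000, 30_000)
    print(f"${low:,} to ${high:,}")
```

A 1,000-chip order at that reference price works out to $4.5 million to $9 million in savings, and the figure scales linearly with fleet size.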

Beyond list pricing, AMD provides value through memory capacity. The MI300X's 192GB of HBM3 memory represents a significant advantage over NVIDIA's options. For organizations running large language models or other memory-intensive AI workloads, this extra memory can eliminate the need for complex multi-chip configurations, reducing system complexity and cost while improving performance. The value of this memory advantage is difficult to quantify precisely but can be substantial—enabling workloads that simply won't fit on competing chips.

Power efficiency represents another economic consideration. While AMD and NVIDIA trade leadership depending on specific workloads and metrics, AMD's chips are generally competitive in performance per watt. In large-scale deployments, where electricity and cooling are major recurring costs over a system's lifetime, efficiency advantages translate directly into lower total cost of ownership.
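A back-of-the-envelope estimate of the recurring cost is board power × hours × facility overhead × electricity price. The sketch below assumes 750 W (roughly the MI300X's rated board power), five years of continuous operation, a power usage effectiveness (PUE) of 1.3, and $0.08/kWh; the PUE and price are illustrative assumptions, and real deployments vary widely.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def lifetime_energy_cost(board_watts: float, years: float, pue: float,
                         usd_per_kwh: float) -> float:
    """Electricity cost (USD) to run one accelerator continuously.

    PUE (power usage effectiveness) scales chip power up to total facility
    power, approximating cooling and other data center overhead.
    """
    kwh = board_watts / 1000 * HOURS_PER_YEAR * years * pue
    return kwh * usd_per_kwh


if __name__ == "__main__":
    # Assumed inputs: 750 W, 5 years, PUE 1.3, $0.08 per kWh.
    print(round(lifetime_energy_cost(750, 5, 1.3, 0.08), 2))
```

Under these assumptions a single accelerator consumes roughly $3,400 of electricity over five years. Multiplied across tens of thousands of devices, plus the host servers and networking the sketch leaves out, even a modest performance-per-watt edge becomes a material line item.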

AMD has also shown flexibility in working with large customers on pricing, terms, and customization. Companies like Microsoft and Meta negotiate directly with AMD on volume purchases, likely securing pricing even more attractive than standard rates. This flexibility—perhaps easier for AMD as the challenger than for NVIDIA as the dominant supplier with backlogs—helps AMD win competitive evaluations.

The value proposition extends to supply availability. Throughout 2023-2024, NVIDIA's AI accelerators faced severe shortages, with lead times stretching 6-12 months. AMD, while also capacity-constrained, generally offered better availability. For companies unable to secure NVIDIA supply or unwilling to wait, AMD provided an alternative path to deploying AI infrastructure. This availability advantage may diminish as TSMC and other foundries expand capacity, but during the critical growth phase of AI deployment, supply access has proven as valuable as raw performance.

Technical Differentiators: Where AMD Excels

Beyond competitive pricing, AMD's MI300 series offers technical advantages in specific scenarios that strengthen its competitive position.

The memory capacity advantage has already been mentioned but deserves emphasis. Large language models continue growing in size: OpenAI has not disclosed GPT-4's parameter count, but openly released models such as Meta's Llama 3.1 405B have hundreds of billions of parameters. As models grow, memory becomes the critical bottleneck. AMD's 192GB of memory per accelerator allows fitting larger models or processing larger batches without splitting the model across as many chips. This can provide both performance advantages and simplified software architecture.
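The effect on chip count can be estimated with a ceiling division: total weight memory divided by usable memory per device. The sketch below assumes FP16 weights and reserves 10% of each device's memory for the KV cache, activations, and runtime overhead; that reserve, the 405B example, and the helper name are illustrative assumptions.

```python
import math

# Per-device memory in GB (10**9 bytes), as quoted in the text.
CAPACITY_GB = {"MI300X": 192, "H100": 80}


def min_accelerators(params_billion: float, bytes_per_param: int, chip: str,
                     usable_fraction: float = 0.9) -> int:
    """Smallest number of devices whose combined memory holds the weights.

    usable_fraction reserves part of each device for the KV cache,
    activations, and runtime overhead (an assumed, workload-dependent value).
    """
    weights_gb = params_billion * bytes_per_param
    usable_per_chip = CAPACITY_GB[chip] * usable_fraction
    return math.ceil(weights_gb / usable_per_chip)


if __name__ == "__main__":
    # Hypothetical 405B-parameter model stored in FP16 (2 bytes per parameter).
    for chip in CAPACITY_GB:
        print(chip, min_accelerators(405, 2, chip))
```

With about 810 GB of FP16 weights, the sketch gives a floor of 5 MI300X devices versus 12 H100s. Real deployments often round tensor-parallel groups up to a power of two, so these numbers are a capacity lower bound rather than a deployment plan.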

AMD's chiplet architecture provides manufacturing advantages that could prove important over time. Rather than fabricating a single monolithic chip, AMD combines multiple smaller chiplets. This approach improves manufacturing yields (a smaller die is less likely to contain a defect, and a defect scraps only that small die rather than an entire large chip), allows mixing different silicon technologies (the MI300X pairs 5nm compute dies with 6nm I/O dies), and provides flexibility in configuring different product variants from common building blocks. These advantages could allow AMD to iterate faster and offer more customized solutions than competitors relying on monolithic designs, although NVIDIA has itself moved to a two-die package with Blackwell.
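A common first-order way to reason about the yield benefit is the Poisson defect model, in which the fraction of defect-free dies is exp(-D0 × A) for defect density D0 and die area A. The sketch below compares a hypothetical 800 mm² monolithic die with a hypothetical 100 mm² chiplet; the defect density of 0.1 per cm² is an illustrative assumption, not a published foundry figure.

```python
import math


def poisson_yield(defects_per_cm2: float, die_area_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson defect model."""
    return math.exp(-defects_per_cm2 * die_area_cm2)


if __name__ == "__main__":
    D0 = 0.1  # assumed defect density, defects per cm^2 (illustrative)
    big_die = poisson_yield(D0, 8.0)  # hypothetical 800 mm^2 monolithic die
    chiplet = poisson_yield(D0, 1.0)  # hypothetical 100 mm^2 chiplet
    print(f"monolithic: {big_die:.1%}, chiplet: {chiplet:.1%}")
```

Under these assumptions about 45% of the large dies come out defect-free versus about 90% of the chiplets, and because chiplets can be tested before packaging, a defect costs only one small die. The model ignores packaging and bonding yield, die-to-die interconnect overhead, and the common practice of salvaging partly defective large dies by disabling units, so it overstates the real-world gap.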

The MI300A variant's integration of CPU and GPU in a single package creates opportunities in specific workloads. Applications that need both types of compute, such as scientific simulations that couple numerical solvers running on CPU cores with AI models running on the GPU, can benefit from this unified architecture because both processors work on the same data in shared memory instead of copying it back and forth. While this hasn't yet proven a major market driver, it represents differentiation that could become more valuable as AI applications diversify beyond pure training and inference.

AMD has emphasized open standards and interoperability, which appeals to certain customer segments. Organizations concerned about vendor lock-in or those with complex, heterogeneous computing environments may prefer AMD's more open approach over NVIDIA's tighter ecosystem control. This philosophical difference won't sway all customers, but for those it matters to, it provides meaningful differentiation.

The Challenges AMD Still Faces

Despite impressive progress, AMD confronts significant challenges in its quest to seriously challenge NVIDIA's AI dominance.

The software ecosystem gap, while narrowed, persists. CUDA's maturity, documentation, community support, and depth of optimization remain superior to ROCm. For smaller organizations without dedicated teams to handle platform-specific optimization, NVIDIA remains the easier choice. The vast corpus of CUDA code, tutorials, and community knowledge creates friction for AMD adoption that pricing alone can't fully overcome.

NVIDIA isn't standing still. The company continues innovating aggressively: its H200 offers more memory than the H100, and its Blackwell architecture (B200 and GB200), ramping through 2025, promises substantial performance improvements. AMD's MI300 series competes well with NVIDIA's Hopper generation (H100 and H200), but maintaining competitiveness requires AMD to keep investing heavily in R&D and iteration. NVIDIA's larger revenue and R&D budget provide resources to sustain rapid innovation.

Manufacturing constraints affect AMD as well. While AMD offers better supply availability than NVIDIA in some periods, both companies depend primarily on TSMC for leading-edge manufacturing. AMD must secure foundry capacity in competition not just with NVIDIA but with Apple, Qualcomm, and other major TSMC customers. Any future supply constraints at TSMC impact AMD's ability to scale production.

Brand perception in the AI community favors NVIDIA. NVIDIA built its reputation as the AI chip leader over years of dominance. When new AI companies form or researchers choose platforms, NVIDIA is the default. AMD must overcome this presumption through demonstration, customer success stories, and word-of-mouth—a slower process than simply having the best technology.

The data center ecosystem has deeply integrated NVIDIA hardware. Data center operators, system integrators, and cloud providers have developed expertise, built infrastructure, and established processes around NVIDIA's products. Swapping in AMD alternatives requires not just chip replacement but potentially changes to software, cooling, networking, and management infrastructure. These switching costs create friction even when AMD offers technically competitive and economically attractive alternatives.

Market Share: Current State and Trajectory

AMD's current AI accelerator market share remains modest compared to NVIDIA's dominance, but it's growing from a small base. Estimates suggest AMD holds roughly 5-10% of the AI accelerator market in 2025, depending on how that market is defined and measured. This represents meaningful progress from effectively zero just a few years ago, but also illustrates how far AMD must go to truly challenge NVIDIA's 80-90% share.

The trajectory matters more than the current position. AMD's share is growing as MI300 production ramps and as major customers deploy systems at scale. If AMD can sustain growth and reach 15-20% market share by 2027-2028, it would represent a significant achievement and establish AMD as a permanent fixture in the AI infrastructure landscape rather than a niche player.
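The scenario above implies a specific pace of share growth, which a compound-annual-growth calculation makes explicit. The sketch below assumes the move happens over three years (2025 to 2028) and uses the midpoints of the article's ranges as a hypothetical starting and ending point.

```python
def implied_annual_growth(start_share: float, end_share: float,
                          years: float) -> float:
    """Compound annual growth rate of market share from start to end."""
    return (end_share / start_share) ** (1 / years) - 1


if __name__ == "__main__":
    # Assumed midpoints: ~7.5% share in 2025 rising to ~17.5% by 2028.
    rate = implied_annual_growth(7.5, 17.5, 3)
    print(f"{rate:.1%}")
```

The midpoint case requires AMD's share itself to grow by roughly a third each year. Taking the low ends (5% to 15%) gives about 44% a year, while the high ends (10% to 20%) give about 26%, and all of that is on top of whatever growth the overall market delivers.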

Different market segments show varying AMD penetration. In hyperscale cloud deployments, where Microsoft, Meta, and Oracle have adopted AMD, market share is higher. In AI startups and academic research—where CUDA's dominance is strongest and economic incentives favor established platforms—AMD's share remains negligible. In enterprise deployments, AMD is making inroads as companies value supplier diversification and are sophisticated enough to handle platform-specific optimization.

Geographic variations exist as well. AMD has found more success in markets where NVIDIA supply has been most constrained or where customers particularly value avoiding single-supplier dependence. U.S. hyperscalers lead AMD adoption, while adoption in China—where U.S. export restrictions complicate the market—follows different patterns.

The Verdict: Serious Challenge or Distant Second?

So is AMD a serious challenge to NVIDIA, or merely a distant second that will perpetually trail the leader?

The evidence suggests AMD has become a serious, credible challenger in specific segments, even if it's not yet threatening NVIDIA's overall dominance. For large organizations with sophisticated AI teams, substantial hardware budgets, and desire for supplier diversity, AMD now offers a viable alternative. The MI300 series provides competitive performance, significant memory advantages, and attractive economics. Major adoptions by Microsoft, Meta, and others validate the technology's capabilities.

However, AMD is not yet—and may never be—a universal substitute for NVIDIA across all use cases and customer types. For AI startups, researchers, and organizations without specialized AI infrastructure teams, NVIDIA remains the path of least resistance. The software ecosystem advantages NVIDIA enjoys from CUDA are real, substantial, and difficult to overcome completely.

The most likely outcome is that AMD carves out a meaningful position in the AI accelerator market without displacing NVIDIA as the leader. AMD might reach 20-25% market share at maturity, capturing customers for whom its technical advantages align with their needs, its pricing justifies the software friction, and diversification benefits outweigh single-supplier convenience.

This outcome—becoming a strong second with significant market share but not the leader—would actually represent substantial success for AMD. The AI accelerator market is growing so rapidly that 20% of the market represents tens of billions in annual revenue. AMD would establish a sustainable position in one of technology's most important growth markets while providing the industry with valuable competition that drives innovation and constrains NVIDIA's pricing power.

Strategic Implications

For investors, AMD's AI ambitions present interesting opportunities and risks. The company's data center and AI revenue is growing rapidly, and success in AI accelerators could transform AMD's financial profile. However, the company faces execution challenges, intense competition, and the reality that matching NVIDIA requires sustained investment that may pressure near-term profitability.

For customers evaluating AI infrastructure, AMD deserves serious consideration rather than reflexive NVIDIA selection. Organizations deploying at scale, with technical sophistication to optimize for AMD platforms, and valuing supplier diversification should evaluate AMD offerings thoroughly. The MI300 series provides genuine competitive advantages in specific dimensions like memory capacity and pricing.

For NVIDIA, AMD represents the most credible competitive threat in AI accelerators. While unlikely to dethrone NVIDIA soon, AMD forces NVIDIA to compete on multiple dimensions—pricing, features, supply availability—that might otherwise receive less attention from a monopolist. This competition ultimately benefits customers and accelerates innovation.

For the industry broadly, AMD's emergence as a viable alternative creates important redundancy in the AI supply chain. Reducing dependence on a single accelerator supplier improves resilience, provides negotiating leverage, and ensures that supply constraints or other issues affecting one supplier don't cripple the entire AI industry.

Looking Ahead: The Next Chapters

AMD's AI ambitions will play out over years, not quarters. The MI300 series represents a moment in this ongoing competition, not the final word. Both AMD and NVIDIA will release next-generation products, continue investing in software, and compete for customers, talent, and manufacturing capacity.

Key factors to watch:

Software ecosystem evolution: If ROCm continues improving and closing gaps with CUDA, AMD's addressable market expands. If the gap persists or widens, AMD remains limited to customers willing to accept software friction.

Customer success stories: As early AMD adopters like Microsoft and Meta gain experience, their experiences—whether positive or negative—will influence broader market perceptions. Public endorsements or quiet shifts back to NVIDIA would both carry weight.

Next-generation hardware: AMD's roadmap beyond MI300 and NVIDIA's Blackwell/next-gen offerings will determine whether AMD can sustain competitiveness or if NVIDIA pulls ahead again. One generation of competitive products doesn't guarantee the next will match up as well.

Supply chain dynamics: As TSMC and other foundries expand capacity, supply constraints that gave AMD opportunities may ease, potentially allowing NVIDIA to satisfy all demand. Alternatively, continued strong AI growth could keep supply tight, maintaining AMD's availability advantages.

Market expansion beyond training: As AI inference workloads grow and new AI applications emerge beyond large language model training, AMD's MI300A and other specialized offerings might find new niches where they excel relative to NVIDIA's general-purpose accelerators.

Conclusion

AMD's AI ambitions have progressed from long-shot challenge to serious competitive position. The company has developed genuinely impressive hardware in the MI300 series, invested substantially in its software ecosystem, secured major customer wins, and demonstrated that credible alternatives to NVIDIA can exist in AI acceleration.

Is AMD a serious challenge to NVIDIA? Yes, for customers who can leverage AMD's strengths and work around its limitations. Will AMD displace NVIDIA as the dominant leader? Almost certainly not in the foreseeable future. The more nuanced reality is that AMD has established itself as the clear number two in AI accelerators, a position that's both important and sustainable.

For the AI industry, this is positive. Competition drives innovation, improves pricing, and creates resilience. NVIDIA's dominance isn't going away, but AMD ensures it won't be absolute. As artificial intelligence transforms the economy, having multiple capable accelerator suppliers benefits everyone—customers who gain choices and negotiating power, innovators who can access necessary hardware, and the broader ecosystem that's less vulnerable to single points of failure.

AMD's AI story is far from finished. The next chapters will determine whether the company can sustain its competitive position, potentially expand it, or ultimately find itself outpaced again by NVIDIA's innovation and ecosystem advantages. But AMD has already accomplished something significant: making the AI accelerator market genuinely competitive for the first time in years.

This analysis is for informational purposes only and does not constitute investment advice. The technology sector evolves rapidly, and competitive positions can shift quickly.

Disclaimer: This analysis is for informational purposes only and does not constitute investment advice. Markets and competitive dynamics can change rapidly in the technology sector. Taggart is not a licensed financial advisor and does not claim to provide professional financial guidance. Readers should conduct their own research and consult with qualified financial professionals before making investment decisions.

Taggart Buie

Writer, Analyst, and Researcher