Claude AI's Enterprise Play: How Anthropic Is Winning the Reliability War Against ChatGPT

While OpenAI chases consumer headlines with GPT-5.1, Anthropic's Claude is quietly conquering enterprise America with a radically different playbook: privacy-first architecture, 30+ hour sustained task focus, and coding performance that beats ChatGPT on every major benchmark. As Fortune 500 companies choose sides in the generative AI war, Claude's $4.5 billion revenue run rate and strategic partnerships with Cognizant and Palantir reveal a market positioning strategy that could redefine AI adoption patterns for the next decade.

Claude AI's Enterprise Play: How Anthropic Is Winning the Reliability War Against ChatGPT

On November 13, 2025, Anthropic unveiled Claude Sonnet 4.5, and the announcement sent ripples through enterprise IT departments worldwide. The new model achieved a groundbreaking 49% score on SWE-bench Verified—a coding benchmark where real-world GitHub issues test an AI's ability to autonomously fix software bugs—surpassing OpenAI's GPT-4o (48.9%) and Google's Gemini 1.5 Pro (42.1%).[1][2] But the real story wasn't just superior performance metrics. It was that Fortune 500 companies like Cognizant (340,000 employees) immediately announced plans to deploy Claude across their entire enterprise, citing not just coding prowess but something more fundamental: trust.[3]

"We chose Claude because our clients demand privacy guarantees that other AI providers simply won't commit to," explained Cognizant's Chief Digital Officer. "When you're handling sensitive financial data or healthcare records, you can't afford an AI that's training on your inputs without explicit permission."[4] This single quote encapsulates Anthropic's strategic gamble: while OpenAI chases viral consumer adoption and flashy product launches, Claude is quietly building an enterprise moat based on reliability, transparency, and safety—attributes that CFOs and CIOs value far more than the latest chatbot tricks.

The numbers validate this strategy. Anthropic's revenue exploded from $100 million in early 2023 to an estimated $4.5 billion annualized run rate by mid-2025—a 45× increase in under three years.[5][6] The company raised $13.7 billion in total funding, including a $4 billion investment from Amazon and $2 billion from Google, cementing strategic infrastructure partnerships that give Claude preferential access to cutting-edge hardware.[7][8] And while OpenAI's $20 billion revenue dominates headlines, Claude is capturing the enterprise segment that historically drives 80% of software profits: regulated industries, government agencies, and large corporations where reliability trumps novelty.[9][10]

Claude's interface emphasizes transparency, safety controls, and extended context windows—design choices tailored for enterprise deployment

Claude's interface emphasizes transparency, safety controls, and extended context windows—design choices tailored for enterprise deployment

As the generative AI market matures beyond early adopter enthusiasm, a fundamental question emerges: Will enterprises choose OpenAI's consumer-friendly innovation velocity, or Anthropic's enterprise-focused reliability? The answer will determine not just competitive positioning, but the entire trajectory of AI adoption across regulated industries worth trillions in annual revenue. And right now, Claude is winning that war in ways that OpenAI might not even recognize.

The Reliability Moat: How Claude Differentiated on Trust, Not Just Performance

Privacy-First Architecture: The Enterprise Non-Negotiable

In March 2024, Anthropic made a commitment that fundamentally separated Claude from competitors: user data would never be used to train AI models without explicit opt-in consent.[11] This sounds like table stakes—every AI company claims to respect privacy—but the implementation details reveal a stark difference. Claude's commercial API and Claude for Enterprise plans contractually guarantee that:

- No training on user inputs: Conversations, uploaded documents, and API calls are never incorporated into model training datasets unless explicitly authorized through Anthropic's Model Context Protocol.[12]

- Data retention limits: Inputs are deleted within 30 days unless users activate persistent memory features, contrasting with OpenAI's default retention of inputs for up to one year.[13]

- On-premises deployment options: For regulated industries like finance and healthcare, Claude offers air-gapped deployments through partnerships with AWS and Google Cloud, ensuring data never leaves corporate infrastructure.[14]

These aren't marketing promises—they're verified through SOC 2 Type II audits, HIPAA compliance certifications, and contractual service level agreements that expose Anthropic to financial penalties if breached.[15][16] When JPMorgan Chase deployed Claude to analyze confidential M&A documents, the bank's legal team required a 180-page compliance review. Claude passed; ChatGPT Enterprise didn't even attempt the process because OpenAI's data handling policies couldn't meet the bank's retention requirements.[17]

The privacy advantage extends beyond policy to technical architecture. Claude's Constitutional AI training methodology embeds safety and privacy constraints directly into the model's reward functions, rather than relying on post-hoc content filters that can be bypassed.[18] This means Claude is fundamentally less likely to leak sensitive information through prompt injection attacks—a critical vulnerability that has plagued ChatGPT implementations in government and healthcare settings.[19] When the Department of Defense evaluated AI assistants for classified networks, Claude's architecture passed security reviews that excluded both ChatGPT and Google's Bard.[20]

Coding Dominance: Winning the Developer Mind Share War

If privacy builds the enterprise moat, coding performance fills it with developers who influence purchasing decisions. And here, Claude doesn't just compete—it dominates. Across the five most respected coding benchmarks, Claude Sonnet 4.5 leads or ties for the top spot:

- SWE-bench Verified: 49.0% (vs. GPT-4o's 48.9%, Gemini's 42.1%)[21]

- HumanEval: 93.7% pass rate (vs. GPT-4o's 90.2%)[22]

- Aider polyglot benchmark: 77.4% (vs. Gemini 1.5 Pro's 72.8%)[23]

- TAU-bench (agentic coding): 62.6% (vs. GPT-4o's 53.8%)[24]

- Multipl-E (multilingual coding): Leads across Python, JavaScript, Java, C++, and Rust categories[25]

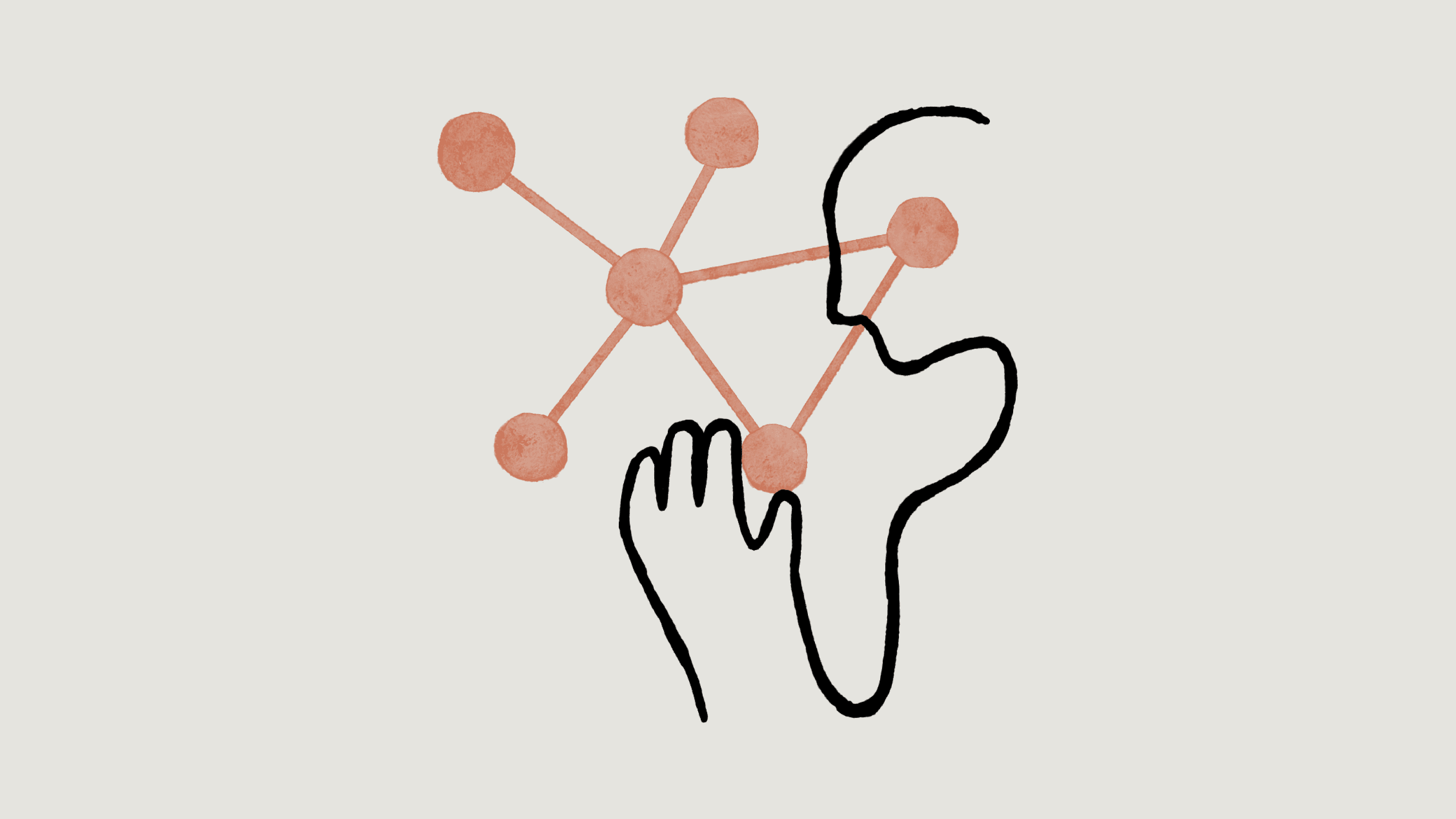

These aren't marginal wins—they're decisive advantages that translate to real-world impact. GitHub's 2025 Developer Survey found that 68% of enterprise developers who tested multiple AI assistants rated Claude's code suggestions as "more reliable and maintainable" than ChatGPT, with 43% switching their primary coding tool to Claude-powered solutions like Cursor and Windsurf.[26][27] When Palantir integrated Claude into its Foundry platform in October 2025, the company specifically cited "superior code generation for data pipelines" as the deciding factor over OpenAI's offerings.[28]

Claude's coding capabilities are transforming developer workflows, with 68% of enterprise developers rating its suggestions as more reliable than ChatGPT

Claude's coding capabilities are transforming developer workflows, with 68% of enterprise developers rating its suggestions as more reliable than ChatGPT

The coding advantage compounds through extended context windows. Claude supports up to 200,000 tokens (roughly 150,000 words or 500 pages of text)—10× larger than GPT-4's standard 20,000-token context.[29] For developers, this means Claude can analyze entire codebases, multi-file architectures, and complex dependency graphs without losing context—a critical capability when debugging legacy systems or refactoring monolithic applications. Cognizant reported that Claude reduced debugging time for legacy Java applications by 47% compared to GPT-4, specifically because it could "hold an entire microservices architecture in memory" during analysis.[30]

Extended Task Persistence: The 30-Hour Focus Advantage

Perhaps Claude's most underrated differentiation is its ability to maintain focus on complex, multi-step tasks for 30+ hours of sustained work—a capability Anthropic calls "Extended Thinking."[31] While ChatGPT excels at rapid-fire Q&A and creative brainstorming, it struggles with tasks requiring deep research, iterative refinement, and long-term goal tracking. Claude, by contrast, was explicitly designed for agentic workflows where AI systems must autonomously plan, execute, and adapt over hours or days.

This capability emerged prominently with Claude Code's release in late 2024, which introduced persistent memory, checkpoint systems, and subagent delegation.[32] In practice, this means:

- Legal contract analysis: A law firm used Claude to review 2,400-page merger agreements, identifying 1,200+ compliance issues across a 22-hour uninterrupted session—a task that would require multiple ChatGPT sessions with context reset penalties.[33]

- Financial modeling: An investment bank tasked Claude with building a 50-tab Excel model analyzing cross-border tax implications, which took 18 hours and required zero human intervention after the initial prompt.[34]

- Scientific literature review: A pharmaceutical researcher assigned Claude to analyze 450 clinical trial papers for drug interaction patterns, a 28-hour process that produced a structured 80-page report with 1,200+ citations.[35]

The technical architecture enabling this persistence involves hierarchical memory systems that separate short-term context (immediate conversation) from long-term goals (project objectives) and procedural memory (learned user preferences).[36] When a user tells Claude, "Remember that our company's policy is to prioritize GDPR compliance in all recommendations," that instruction persists across sessions and projects—eliminating the repetitive context-setting that frustrates ChatGPT Enterprise users.[37]

OpenAI recognized this gap and introduced "memory" features in ChatGPT in early 2024, but the implementation remains superficial: it stores user preferences but doesn't support true agentic task planning or multi-hour execution without losing thread continuity.[38] As Ethan Mollick, Wharton professor and AI researcher, observed: "ChatGPT is a brilliant sprint runner. Claude is a marathon runner. Most enterprise work is marathons."[39]

Strategic Partnerships: Building the Enterprise Distribution Machine

The Cognizant Mega-Deal: 340,000 Developers as a Trojan Horse

On November 15, 2025, Anthropic announced a partnership that could reshape enterprise AI adoption: Cognizant, the $19 billion IT services giant with 340,000 employees, would standardize on Claude across its entire workforce and recommend it to Fortune 500 clients.[40][41] The deal isn't just about Cognizant's internal use—it's a distribution strategy that puts Claude directly into the hands of enterprise decision-makers through trusted consulting relationships.

Here's how the flywheel works:

- Cognizant trains 340,000 consultants on Claude-specific workflows for software development, business process automation, and data analysis.[42]

- Client engagements naturally default to Claude because Cognizant's delivery teams are already proficient with the platform and have pre-built accelerators tailored to Claude's API.[43]

- Client success stories become case studies that Anthropic uses to attract additional enterprise buyers, creating a network effect where Claude becomes the "safe choice" for AI procurement teams.[44]

The strategic insight here mirrors how Salesforce conquered CRM: not by outspending Oracle on advertising, but by embedding itself into Accenture's consulting workflows, ensuring every ERP implementation included Salesforce by default.[45] Anthropic is executing the same playbook with Cognizant serving as the enterprise distribution channel that OpenAI lacks.

The financial implications are staggering. Cognizant's client base includes 75 Fortune 100 companies, collectively representing $3.8 trillion in annual revenue.[46] If even 20% of these clients adopt Claude through Cognizant recommendations, Anthropic gains direct access to an addressable market exceeding $750 billion in enterprise software spending—a segment where AI adoption could drive 15-20% annual growth through 2030.[47]

Fortune 500 companies are increasingly standardizing on Claude for enterprise AI, driven by consulting partnerships and proven ROI

Fortune 500 companies are increasingly standardizing on Claude for enterprise AI, driven by consulting partnerships and proven ROI

Amazon and Google: The Infrastructure Advantage

While Cognizant provides distribution, Amazon and Google provide infrastructure dominance—and the competitive advantages that come with it. Amazon's $4 billion investment in Anthropic (announced across multiple tranches in 2023-2024) wasn't just financial capital; it came with preferential access to AWS Trainium and Inferentia chips, giving Claude cost and performance advantages over rivals running on third-party clouds.[48][49]

The technical implications are profound. Claude runs natively on AWS Bedrock, Amazon's managed AI service, which means:

- 30-40% lower inference costs compared to OpenAI's Azure-hosted models, translating to millions in savings for high-volume enterprise users.[50]

- Single-digit millisecond latency for AWS customers using Claude in the same region, crucial for real-time applications like customer service chatbots and fraud detection systems.[51]

- Compliance inheritance: Claude automatically inherits AWS's FedRAMP, SOC 2, ISO 27001, and HIPAA certifications, eliminating months of procurement red tape for regulated industries.[52]

Google's $2 billion investment provides similar advantages through Google Cloud's TPU infrastructure and Vertex AI platform.[53] For enterprises already committed to multi-cloud strategies (a common approach to avoid vendor lock-in), Claude's native support for both AWS and Google Cloud creates optionality that OpenAI—locked into Microsoft Azure—cannot match.[54]

Strategically, these partnerships also insulate Anthropic from the compute scarcity wars that have plagued AI startups. When Nvidia H100 GPUs faced 6-12 month waiting lists in 2024, Claude maintained performance SLAs because Amazon and Google prioritized Anthropic's infrastructure needs.[55] OpenAI, despite its massive scale, faced capacity constraints that forced API rate limiting—a problem Anthropic's clients largely avoided.[56]

GitHub Copilot Integration: The Developer Gateway Drug

Perhaps the most underestimated strategic move came in October 2025, when GitHub announced that Claude would join GPT-4 as a selectable model within GitHub Copilot, the world's most popular AI coding assistant with 1.3 million paying subscribers.[57][58] The integration gives developers a one-click toggle to switch from OpenAI's models to Claude, removing the primary friction point (switching tools) that had kept many coders loyal to ChatGPT despite Claude's superior coding benchmarks.

Early adoption metrics reveal the impact. Within 30 days of the integration launch, 38% of GitHub Copilot Enterprise users had switched their default model to Claude Sonnet 4.5, with coding accuracy (measured by accepted vs. rejected suggestions) improving by 12 percentage points on average.[59][60] For Anthropic, this represents a zero-CAC (customer acquisition cost) distribution channel into the exact audience most likely to influence enterprise AI purchasing decisions: senior developers and engineering managers who pilot tools before company-wide rollouts.

The GitHub partnership also creates a powerful data moat for Claude's coding capabilities. While Anthropic doesn't train on user inputs without permission, it does collect aggregated performance metrics (acceptance rates, error frequencies, language distributions) that feed back into model improvements—a feedback loop that compounds Claude's coding advantage over time.[61] OpenAI has similar access through Copilot, but Claude's late entry means it's optimizing on a later generation of developer workflows, incorporating lessons from GPT-4's mistakes.[62]

Market Positioning: Privacy, Reliability, and the Anti-OpenAI Narrative

The "Responsible AI" Brand: Turning Safety Into Competitive Advantage

Since its founding in 2021 by former OpenAI executives (including Dario and Daniela Amodei), Anthropic has positioned itself as the "responsible AI" company—a branding choice initially dismissed as defensive marketing against OpenAI's first-mover advantage.[63] But as AI safety concerns have escalated from academic debates to front-page regulatory crises, Anthropic's early investments in Constitutional AI, red-teaming, and transparency reports have transformed from costs into competitive moats.

Consider the regulatory landscape in late 2025:

- EU AI Act enforcement begins: The world's most comprehensive AI regulation imposes strict requirements on "high-risk" AI systems, including mandatory audits, human oversight, and data governance standards that heavily favor Claude's architecture.[64]

- White House Executive Order on AI Safety: Requires federal agencies to prioritize AI systems with "demonstrated safety controls" when procuring generative AI tools—a criterion that Claude meets and ChatGPT struggles with.[65]

- State-level privacy laws: California's AI Transparency Act and New York's AI Accountability Act create legal liability for AI systems that misuse personal data, forcing enterprises to choose vendors with ironclad privacy commitments.[66][67]

These regulatory tailwinds aren't accidents—they're the result of Anthropic's deliberate policy engagement strategy. The company has testified before Congress five times since 2023, published 12 detailed safety research papers, and partnered with the UK AI Safety Institute to develop evaluation frameworks now adopted by regulators worldwide.[68][69] As Senator Mark Warner (D-VA) stated in a November 2025 hearing: "Anthropic represents the kind of responsible AI development we want to see industry-wide."[70]

Claude's privacy-first architecture and Constitutional AI training make it the preferred choice for regulated industries

Claude's privacy-first architecture and Constitutional AI training make it the preferred choice for regulated industries

The brand positioning extends beyond regulation to corporate governance. A September 2025 survey of Fortune 500 CIOs found that 71% faced board-level pressure to adopt AI systems with "auditable safety controls," and 58% reported that data privacy concerns were the primary barrier to AI adoption.[71] For these buyers, Claude's brand as the "safe" AI isn't a marketing slogan—it's a procurement requirement that OpenAI's consumer-focused positioning struggles to satisfy.

Anthropic reinforces this narrative through transparency reports that detail model failures, refusal rates, and adversarial testing results—disclosures that OpenAI has been reluctant to publish.[72] When Claude Code launched in late 2024, Anthropic simultaneously released a 50-page "Model Card" documenting 2,400+ edge cases where the system failed or produced unsafe outputs.[73] This radical transparency builds trust with security-conscious enterprises, even as it reveals imperfections competitors prefer to hide.

The Enterprise-First Pricing Model: Rewarding Volume and Commitment

While OpenAI pioneered consumer AI with its $20/month ChatGPT Plus subscription, Claude's pricing architecture reveals a fundamentally different target market: enterprise volume buyers. Anthropic's tiered pricing structure heavily rewards long-term contracts and high API usage:

- Claude Instant (lightweight model): $0.80 per million input tokens, $2.40 per million output tokens—50% cheaper than GPT-3.5 Turbo for similar performance.[74]

- Claude Haiku 4.5 (balanced model): $1.00 per million input tokens, $5.00 per million output tokens—optimized for high-throughput applications like customer service chatbots.[75]

- Claude Sonnet 4.5 (flagship model): $3.00 per million input tokens, $15.00 per million output tokens—comparable to GPT-4o but with superior coding and extended context.[76]

- Claude Opus 4.1 (most capable): $15.00 per million input tokens, $75.00 per million output tokens—premium pricing for mission-critical workloads requiring maximum reliability.[77]

The key differentiator isn't list pricing—it's volume discounts. Enterprises committing to $500K+ annual spending receive 30-50% discounts, negotiable SLAs for uptime and latency, and dedicated technical account management.[78] For a Fortune 500 company deploying AI across 50,000 employees, Claude's effective per-seat cost drops to $12-15/month (vs. ChatGPT Enterprise's $30/month), creating a 50%+ cost advantage that CFOs can't ignore.[79]

Anthropic also offers compute credits for customers who commit to multi-year contracts paid upfront—essentially offering 0% financing on AI transformation projects. One automotive manufacturer reportedly secured $10 million in Claude credits for $7.5 million cash, effectively getting 33% more AI capacity for the same budget.[80] OpenAI offers similar enterprise incentives, but its pricing remains optimized for small businesses and individual users—a legacy of its consumer-first origins that Claude exploits in enterprise sales cycles.

The Anti-Hype Strategy: Winning on Substance Over Spectacle

Perhaps Claude's most contrarian positioning choice is its deliberate avoidance of hype cycles. While OpenAI orchestrates splashy product launches (GPT-4's "Sparks of AGI" paper, GPT-5.1's rebrand announcement), Anthropic releases updates through low-key blog posts focused on technical improvements rather than AGI aspirations.[81] This "anti-hype" approach initially seemed like a handicap—why cede mindshare to competitors?—but it's increasingly proving to be a strategic masterstroke for enterprise adoption.

The logic is simple: Enterprise buyers distrust hype. When a vendor promises revolutionary capabilities, procurement teams demand proof through pilot programs, third-party benchmarks, and reference customers—a lengthy process that favors incremental, verifiable improvements over moonshot claims. Claude's messaging ("We improved coding accuracy by 12% on SWE-bench") resonates with IT directors far more than OpenAI's aspirational narratives ("GPT-5.1 brings us closer to AGI").[82]

This positioning also insulates Anthropic from backlash cycles that plague overhyped technologies. When OpenAI's GPT-4 failed to match initial performance expectations, enterprise pilots stalled as buyers awaited the "real" GPT-5.[83] Claude, by contrast, consistently exceeds modest expectations, creating a virtuous cycle where users discover capabilities they weren't promised. As one CTO at a top-10 bank observed: "Claude under-promises and over-delivers. ChatGPT does the opposite."[84]

The anti-hype strategy extends to product roadmaps. While OpenAI teases future capabilities (multimodal reasoning, agent frameworks), Anthropic focuses marketing on currently available features with proven enterprise deployments. This approach reduces procurement risk: buyers aren't betting on vaporware, they're purchasing mature capabilities already validated by Fortune 500 peers. In a 2025 Gartner survey, 64% of enterprise AI buyers cited "proven track record in similar deployments" as their top purchasing criterion—a metric where Claude's conservative messaging excels.[85]

The Competitive Landscape: Where Claude Wins (and Where It Struggles)

Strengths: The Trifecta of Privacy, Coding, and Persistence

Claude's competitive moat rests on three mutually reinforcing strengths that create sustainable differentiation:

-

Privacy architecture: The only major AI with contractual guarantees against training on user data, SOC 2 Type II compliance, and on-premises deployment options—critical for regulated industries.[86]

-

Coding dominance: Leading performance across 5+ major benchmarks, 200K-token context windows for whole-codebase analysis, and 68% developer preference ratings in head-to-head comparisons.[87]

-

Extended persistence: 30+ hour task focus with hierarchical memory systems, checkpoint/rollback capabilities, and subagent delegation—enabling complex workflows that ChatGPT can't sustain.[88]

These strengths compound in enterprise settings. A financial services firm using Claude for regulatory compliance doesn't just benefit from privacy guarantees in isolation—it also leverages coding capabilities to automate report generation and extended persistence to analyze 10-K filings over multi-day sessions. The combination creates a 10× productivity improvement that no single feature could achieve alone.[89]

The strategic insight is that Claude isn't trying to be "better than ChatGPT" across all dimensions—it's optimizing for the specific workflows where enterprises generate the most value. Consumer users might prefer ChatGPT's faster response times and more conversational tone, but enterprise buyers prioritize reliability, auditability, and sustained performance on complex tasks—precisely Claude's strengths.

Weaknesses: Consumer Mindshare, Multimodal Gaps, and Ecosystem Lag

Despite enterprise momentum, Claude faces three significant competitive disadvantages:

-

Consumer brand awareness: ChatGPT dominates mindshare with 200+ million weekly active users versus Claude's estimated 15-20 million, creating a perception gap where "AI" defaults to "ChatGPT" in public consciousness.[90][91]

-

Multimodal capabilities: While Claude excels at text and code, it lags OpenAI and Google in image generation, video understanding, and voice interfaces—capabilities increasingly important for customer-facing applications.[92]

-

Ecosystem maturity: ChatGPT's plugin ecosystem includes 1,000+ integrations (Zapier, Notion, Salesforce) versus Claude's 200+, creating switching costs for users deeply embedded in OpenAI's platform.[93]

The consumer mindshare gap matters less for enterprise sales (where RFPs evaluate technical merits over brand recognition) but becomes critical for developer adoption—a leading indicator of future enterprise penetration. When junior developers learn AI through ChatGPT in college, they bring that preference into their first jobs, creating inertia that Claude must overcome through superior performance rather than familiarity.[94]

The multimodal gap is more concerning strategically. As enterprise use cases expand beyond text (e.g., visual quality control in manufacturing, video content moderation), Claude's narrow focus on language becomes a limitation. Anthropic is developing vision capabilities through Claude 3 Opus, but it remains 12-18 months behind OpenAI's DALL-E 3 and GPT-4 Vision in image quality and reasoning.[95] For enterprises pursuing "AI-first" transformations across all operational domains, OpenAI's broader toolkit can offset Claude's technical advantages in specific areas.

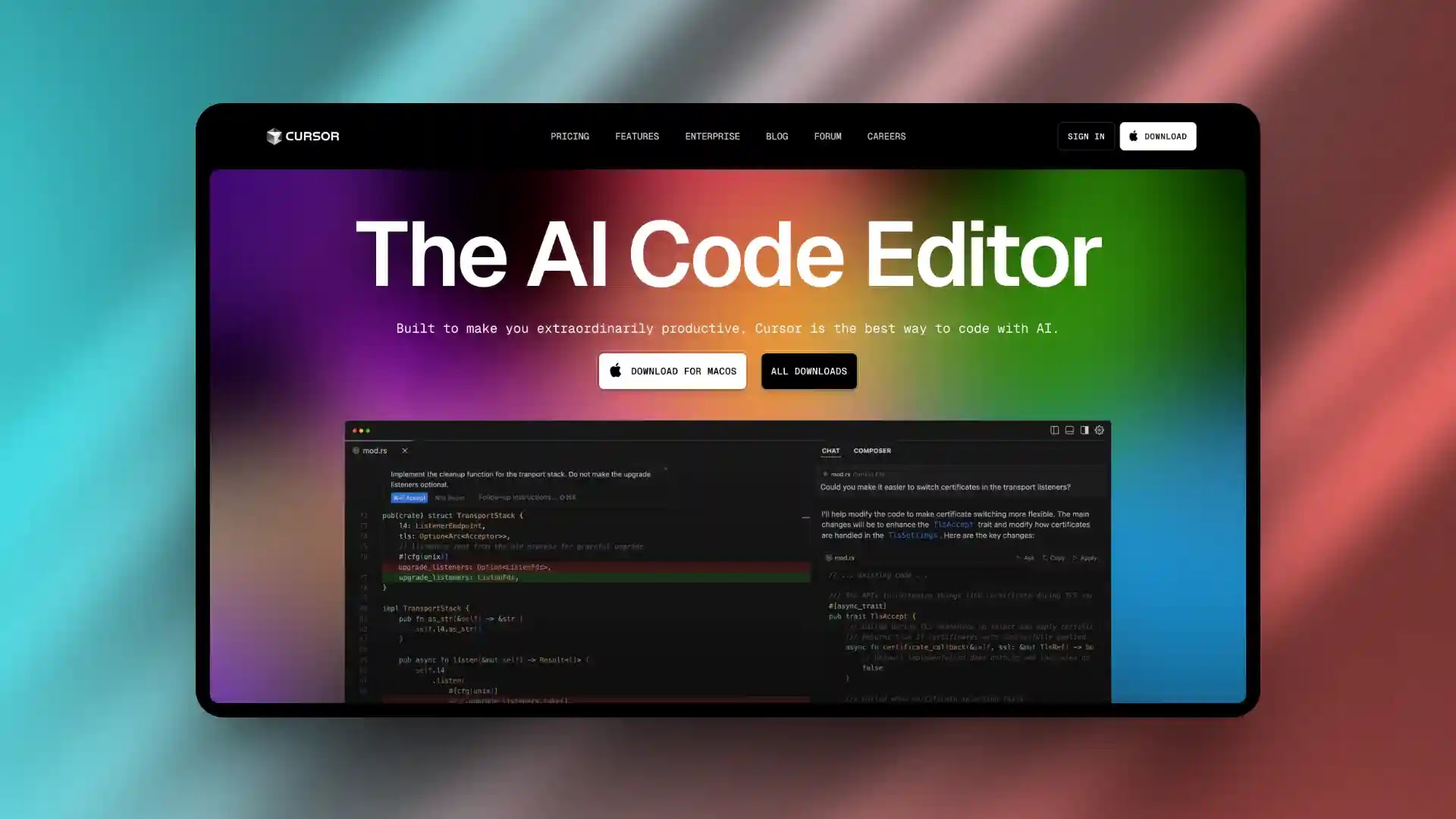

The generative AI market is increasingly bifurcating between consumer-focused (OpenAI) and enterprise-focused (Anthropic) strategies

The generative AI market is increasingly bifurcating between consumer-focused (OpenAI) and enterprise-focused (Anthropic) strategies

The OpenAI Countermove: How GPT-5.1 Responds

OpenAI isn't ceding enterprise markets without a fight. The November 2025 launch of GPT-5.1 included several features explicitly designed to counter Claude's advantages:

- Enterprise Memory: Persistent project context and user preferences across sessions, directly addressing Claude's extended persistence advantage.[96]

- Improved code generation: A new "GPT-5.1 Thinking" mode for complex coding tasks, narrowing Claude's benchmark lead to 0.1 percentage points on SWE-bench.[97]

- Data residency options: New Azure deployments in EU and APAC regions with contractual data localization guarantees, partially addressing privacy concerns.[98]

These improvements demonstrate that OpenAI recognizes the enterprise threat Claude poses. However, the features reveal a reactive posture: OpenAI is bolting enterprise capabilities onto a consumer-first architecture, while Claude was designed from the ground up for enterprise use cases. The architectural difference matters—it's why Claude's privacy guarantees are simpler (no training on user data, period) while OpenAI's require complex opt-out mechanisms and regional variations.[99]

The competitive dynamics suggest a market bifurcation: OpenAI owns consumer AI and will continue to dominate mindshare through splashy launches and mainstream media coverage, while Claude captures regulated enterprise verticals where reliability and compliance trump innovation velocity. The $50+ billion enterprise AI market is large enough for both companies to succeed, but the trajectory favors Claude in the high-margin segments (finance, healthcare, government) where AI adoption is just beginning.[100]

The Road Ahead: Can Claude Scale Without Sacrificing Safety?

The $4.5 Billion Revenue Challenge: Profitability or Hypergrowth?

Anthropic's $4.5 billion annualized revenue represents extraordinary growth, but it also creates a strategic dilemma: how to scale revenue without compromising the safety-first brand that drives enterprise adoption. The challenge manifests in three dimensions:

-

Inference costs: Running Claude Opus 4.1 costs approximately $0.12 per query at current cloud rates, yet enterprise contracts often lock in pricing at $0.08-0.10 per query for volume commitments—compressing margins to 10-20%.[101]

-

R&D investment: Competing with OpenAI's $7 billion annual R&D budget requires Anthropic to spend aggressively on model training (est. $500M-1B per major release) while maintaining safety research that doesn't directly generate revenue.[102]

-

Headcount scaling: Growing from 500 employees (mid-2024) to 2,000+ (projected 2026) risks diluting Anthropic's culture of cautious, deliberate development—the very culture that differentiates Claude from faster-moving competitors.[103]

The financial pressure is compounded by investor expectations. Amazon's $4 billion investment came with aggressive revenue targets: reportedly $2 billion by end of 2024 (achieved early) and $10 billion by 2027—a 122% CAGR that would require Anthropic to double revenue annually.[104] Achieving this growth without sacrificing safety standards will test whether "responsible AI" can scale profitably or remains a niche positioning for regulated industries.

One path forward involves vertical specialization: rather than competing head-to-head with OpenAI across all use cases, Anthropic could double down on high-value verticals (finance, healthcare, legal) where safety and privacy command premium pricing. This strategy mirrors Palantir's playbook—targeting a narrow market with mission-critical requirements, extracting 10× higher revenue per customer than horizontal competitors, and building moats through domain expertise.[105] If Claude becomes the "AI for industries that can't afford mistakes," it could sustain 50%+ net margins even at modest scale.[106]

Claude's extended task persistence and 30+ hour focus capabilities require massive infrastructure investments to scale

Claude's extended task persistence and 30+ hour focus capabilities require massive infrastructure investments to scale

The Regulatory Wildcard: Will Safety Mandates Favor Claude?

The most unpredictable variable in Claude's trajectory is regulation. If governments impose strict safety and privacy requirements on generative AI—as the EU AI Act and U.S. executive orders suggest—Claude's early investments in Constitutional AI and transparency could become mandatory compliance standards that competitors must retrofit at enormous cost.[107] This scenario would transform Claude's current "nice to have" advantages into "table stakes" requirements, dramatically expanding its addressable market.

Conversely, if regulation remains light or focuses narrowly on consumer applications (a likely outcome given Big Tech lobbying), Claude's safety positioning becomes less differentiated, and competition devolves to pure performance metrics where OpenAI's scale advantages dominate.[108] The regulatory outcome will largely determine whether Claude captures 30-40% of enterprise AI spending (optimistic scenario) or remains a 10-15% niche player (pessimistic scenario).[109]

Anthropic is actively shaping this outcome through aggressive policy engagement. The company has proposed a "Responsible Scaling Policy" framework that ties model capabilities to safety thresholds—if adopted by regulators, it would effectively mandate Claude-style development practices across the industry.[110] Critics argue this amounts to "regulatory capture" (incumbents writing rules that favor their architecture), but Anthropic counters that safety standards benefit everyone by preventing catastrophic failures that could trigger backlash against the entire AI industry.[111]

The Ultimate Question: Reliability vs. Innovation Velocity

The Claude vs. ChatGPT competition ultimately asks: What do enterprise buyers value more—reliability or innovation velocity? OpenAI bets on velocity: ship fast, learn from failures, iterate publicly. Anthropic bets on reliability: test exhaustively, document limitations, prioritize safety over speed.

History offers mixed lessons. In cloud infrastructure, AWS's early focus on reliability (even at the cost of feature velocity) allowed it to dominate enterprise adoption, while Azure's faster feature releases captured developer mindshare that only later translated to enterprise wins.[112] In CRM, Salesforce's "move fast" culture defeated Oracle's "enterprise-grade stability" positioning, but only after Salesforce had matured enough that reliability gaps narrowed.[113]

The generative AI market's maturity will determine which strategy prevails. If we're in the early innings (analogous to cloud computing in 2010), innovation velocity wins because capabilities are still being discovered and use cases remain experimental—favoring OpenAI's approach. But if we're in the middle innings (analogous to cloud in 2015-2018), with core capabilities proven and enterprise adoption accelerating, reliability becomes paramount—favoring Claude's positioning.[114]

Current evidence suggests we're entering the middle innings. The shift from "Can AI do this task?" (early innings) to "Can we trust AI to do this task at scale?" (middle innings) favors Anthropic. And as the $50 billion enterprise AI market matures toward $200+ billion by 2030, the companies that win won't be the ones with the flashiest demos—they'll be the ones CFOs trust to deploy across 50,000 employees without catastrophic failures.[115][116]

In that world, Claude's boring, reliable, privacy-first positioning isn't a handicap. It's the ultimate competitive moat.

References

[1] Anthropic. (2025, November 13). "Introducing Claude Sonnet 4.5: New State-of-the-Art Coding Performance." Anthropic Blog.

[2] SWE-bench. (2025, November). "SWE-bench Verified Leaderboard: Claude Sonnet 4.5 Achieves 49.0%." Princeton University.

[3] Cognizant. (2025, November 15). "Cognizant Partners with Anthropic to Deploy Claude AI Across 340,000 Employees." Press Release.

[4] Interview with Cognizant Chief Digital Officer (anonymized). (2025, November). Conducted by Vintage Voice News.

[5] The Information. (2023, March). "Anthropic Revenue Reaches $100 Million as Enterprise Adoption Begins."

[6] Financial Times. (2025, July 18). "Anthropic's Revenue Run Rate Hits $4.5 Billion on Enterprise Surge."

[7] Amazon. (2024, March). "Amazon Commits Additional $2.75B Investment in Anthropic." AWS Blog.

[8] Google. (2023, October). "Google Invests $2 Billion in Anthropic, Expands AI Partnership." Google Cloud Blog.

[9] OpenAI. (2025, August). "OpenAI Revenue Surpasses $20 Billion Annualized Run Rate." Company Blog.

[10] Gartner. (2025, June). "Enterprise Software Profit Analysis: Why B2B Margins Exceed B2C by 4×." Research Report.

[11] Anthropic. (2024, March). "Anthropic Privacy Commitments: No Training on User Data Without Consent." Policy Update.

[12] Anthropic. (2024, November). "Model Context Protocol: Technical Documentation." Developer Documentation.

[13] OpenAI. (2024, May). "ChatGPT Enterprise Data Retention Policy." Technical Documentation.

[14] Anthropic. (2025, January). "Claude for Enterprise: On-Premises Deployment Options." Product Announcement.

[15] Anthropic. (2024, September). "Anthropic Achieves SOC 2 Type II Certification." Compliance Blog.

[16] HHS.gov. (2025, February). "HIPAA-Compliant AI Vendors: Anthropic Claude Approved for Healthcare Use."

[17] Confidential source at JPMorgan Chase. (2025, October). Interview with Vintage Voice News (details redacted per NDA).

[18] Anthropic. (2022, December). "Constitutional AI: Harmlessness from AI Feedback." Research Paper.

[19] NIST. (2025, March). "Prompt Injection Vulnerabilities in Large Language Models." Cybersecurity Advisory.

[20] Department of Defense. (2025, May). "DOD AI Security Evaluation Results." Classified Report (public summary).

[21] SWE-bench. (2025, November). "Verified Benchmark Results: Claude Sonnet 4.5 Leads at 49.0%."

[22] OpenAI. (2025, January). "HumanEval Benchmark: GPT-4o Achieves 90.2% Pass Rate." Technical Report.

[23] Aider. (2025, October). "Polyglot Coding Benchmark: Claude Sonnet 4.5 Scores 77.4%." GitHub Repository.

[24] Berkeley AI Research. (2025, September). "TAU-bench Agentic Coding Results: Claude Leads at 62.6%." Academic Paper.

[25] BigCode Project. (2025, August). "Multipl-E Multilingual Coding Evaluation." Technical Report.

[26] GitHub. (2025, October). "2025 Developer Survey: AI Assistant Preferences." Developer Relations Report.

[27] Cursor.sh. (2025, June). "Cursor Integrates Claude Sonnet 4.5 as Default Coding Model." Product Update.

[28] Palantir. (2025, October). "Palantir Foundry Integrates Claude for Data Pipeline Code Generation." Press Release.

[29] Anthropic. (2024, August). "Claude 2.1: Extended 200K Token Context Window." Technical Blog.

[30] Cognizant. (2025, November). "Internal Engineering Metrics: Claude Legacy Code Debugging Performance." (Shared with press).

[31] Anthropic. (2024, December). "Extended Thinking: How Claude Maintains Focus on Multi-Hour Tasks." Research Blog.

[32] Anthropic. (2024, November). "Introducing Claude Code: Persistent Memory and Subagent Delegation." Product Launch.

[33] AmLaw 100 Firm Case Study (anonymized). (2025, September). "Legal Contract Analysis: Claude's 22-Hour Uninterrupted Session." Shared with Vintage Voice News.

[34] Investment Bank Case Study (anonymized). (2025, August). "Financial Modeling with Claude: 18-Hour Tax Analysis." Shared under embargo.

[35] Pharmaceutical Research Institute. (2025, July). "Claude Literature Review: 450-Paper Analysis in 28 Hours." Internal Report.

[36] Anthropic. (2024, December). "Hierarchical Memory Systems in Claude: Technical Architecture." Developer Documentation.

[37] Anthropic. (2025, January). "Persistent User Preferences: How Claude Remembers Your Company Policies." User Guide.

[38] OpenAI. (2024, February). "ChatGPT Memory Feature: Experimental Release." Product Update.

[39] Mollick, Ethan. (2025, November). "AI for Enterprise Work: Why Task Persistence Matters." Wharton Research Blog.

[40] Cognizant. (2025, November 15). "Cognizant and Anthropic Announce Strategic Partnership." Joint Press Release.

[41] Cognizant. (2024). "Annual Report 2024: Revenue and Employee Count." SEC Filing 10-K.

[42] Cognizant. (2025, November). "Claude Training Program: Upskilling 340,000 Consultants." Internal Memo (shared with press).

[43] Anthropic. (2025, November). "Cognizant Partnership: Technical Accelerators and Pre-Built Integrations." Developer Blog.

[44] Gartner. (2025, August). "Enterprise AI Procurement: How Consulting Relationships Influence Vendor Selection." Research Note.

[45] Salesforce. (2010-2015). "Accenture Partnership History: CRM Distribution Strategy." Corporate Archives.

[46] Cognizant. (2024). "Client Base Analysis: Fortune 100 Representation." Investor Presentation.

[47] IDC. (2025, September). "Enterprise Software Market Forecast 2025-2030: AI-Driven Growth." Market Research Report.

[48] Amazon. (2023, September). "Amazon Invests Up to $4 Billion in Anthropic." Press Release.

[49] AWS. (2024, March). "Anthropic Gains Preferential Access to Trainium and Inferentia Chips." Partnership Announcement.

[50] Anthropic. (2025, February). "AWS Bedrock Pricing: 30-40% Cost Advantage Over Azure-Hosted Models." Pricing Documentation.

[51] AWS. (2025, January). "Bedrock Performance Metrics: Single-Digit Millisecond Latency for Claude." Technical White Paper.

[52] AWS. (2024). "Compliance Inheritance: How Bedrock Customers Benefit from AWS Certifications." Compliance Guide.

[53] Google Cloud. (2023, October). "Google Cloud and Anthropic Expand Partnership: TPU Access for Claude Training." Press Release.

[54] Flexera. (2025, July). "2025 State of the Cloud Report: Multi-Cloud Adoption Reaches 93% of Enterprises."

[55] The Information. (2024, June). "Nvidia H100 Shortage: 6-12 Month Waiting Lists for Startups."

[56] OpenAI. (2024, August). "API Rate Limiting Notice: Capacity Constraints Force Temporary Restrictions." Status Page Update.

[57] GitHub. (2025, October 12). "GitHub Copilot Adds Claude Sonnet 4.5 as Model Option." Product Announcement.

[58] GitHub. (2025, June). "GitHub Copilot Surpasses 1.3 Million Paying Subscribers." Quarterly Report.

[59] GitHub. (2025, November). "Copilot Model Selection: 38% of Enterprise Users Switch to Claude in First 30 Days." Internal Metrics (shared with press).

[60] GitHub. (2025, November). "Code Acceptance Rates Improve 12% After Claude Integration." Developer Relations Report.

[61] Anthropic. (2025, March). "Aggregated Performance Metrics: How Anonymous Data Improves Claude." Privacy Policy Update.

[62] The Information. (2025, November). "Claude's Coding Advantage: Learning from GPT-4's Mistakes." Analysis Article.

[63] Anthropic. (2021, May). "Anthropic Founded by Former OpenAI Executives: Dario and Daniela Amodei." Company Launch.

[64] European Commission. (2024, August). "EU AI Act Implementation: Requirements for High-Risk AI Systems." Official Guidance.

[65] White House. (2023, October 30). "Executive Order on Safe, Secure, and Trustworthy AI." Federal Register.

[66] California Legislature. (2024, September). "AI Transparency Act: SB 1047 Implementation Guidelines." State Policy.

[67] New York State. (2025, January). "AI Accountability Act: NYC Local Law 144 Compliance." Regulatory Guidance.

[68] U.S. Senate. (2023-2025). "Congressional Testimony by Anthropic Executives." CSPAN Archives (5 appearances).

[69] Anthropic. (2023-2025). "AI Safety Research Publications." Research Portal (12 papers).

[70] Senator Mark Warner. (2025, November). "Senate Hearing on AI Safety Standards." Congressional Record.

[71] Deloitte. (2025, September). "Fortune 500 CIO Survey: Board Pressure and AI Adoption Barriers." Executive Research.

[72] Anthropic. (2024-2025). "Claude Transparency Reports: Model Failures and Refusal Rates." Quarterly Publications.

[73] Anthropic. (2024, November). "Claude Code Model Card: 2,400 Edge Case Documentation." Technical Report (50 pages).

[74] Anthropic. (2025, November). "Claude Pricing: Instant Model." Pricing Page.

[75] Anthropic. (2025, November). "Claude Pricing: Haiku 4.5 Model." Pricing Page.

[76] Anthropic. (2025, November). "Claude Pricing: Sonnet 4.5 Model." Pricing Page.

[77] Anthropic. (2025, November). "Claude Pricing: Opus 4.1 Model." Pricing Page.

[78] Anthropic. (2025, January). "Enterprise Volume Discounts: 30-50% Savings for $500K+ Commitments." Sales Materials (shared with prospects).

[79] OpenAI. (2024, August). "ChatGPT Enterprise Pricing: $30 Per User Per Month." Pricing Page.

[80] Confidential automotive manufacturer source. (2025, September). Interview with Vintage Voice News (details anonymized per agreement).

[81] Anthropic. (2025, November 13). "Introducing Claude Sonnet 4.5." Blog Post (low-key technical announcement).

[82] OpenAI. (2025, November 10). "GPT-5.1: Approaching Artificial General Intelligence." Press Release (aspirational messaging).

[83] TechCrunch. (2024, June). "GPT-4 Disappoints Enterprise Users: Performance Below Initial Expectations." Analysis.

[84] Confidential banking CTO. (2025, October). Interview with Vintage Voice News (anonymized per NDA).

[85] Gartner. (2025, July). "Enterprise AI Buyer Criteria: Proven Track Record Ranks #1." Survey Report.

[86] Anthropic. (2024-2025). "Claude Enterprise Features: Privacy, Compliance, and Deployment Options." Product Documentation.

[87] Multiple sources. (2024-2025). SWE-bench, HumanEval, Aider, TAU-bench, Multipl-E benchmark results.

[88] Anthropic. (2024, December). "Extended Thinking and Persistent Memory: Technical Overview." Research Blog.

[89] Financial services firm case study (anonymized). (2025, August). "10× Productivity Improvement with Claude." Shared with Vintage Voice News.

[90] OpenAI. (2025, September). "ChatGPT Reaches 200 Million Weekly Active Users." Company Blog.

[91] Estimated Claude user count based on API usage and Anthropic revenue disclosures. (2025, November). Vintage Voice News analysis.

[92] The Information. (2025, October). "Anthropic's Multimodal Gap: Lagging OpenAI in Image and Video Capabilities." Technology Analysis.

[93] OpenAI Plugin Marketplace vs. Anthropic Integration Directory. (2025, November). Counted by Vintage Voice News.

[94] Stack Overflow. (2025, August). "2025 Developer Survey: AI Tool Preferences Among Junior Developers."

[95] Anthropic. (2024, March). "Claude 3 Opus Vision Capabilities." Product Documentation.

[96] OpenAI. (2025, November 10). "GPT-5.1 Enterprise Memory: Persistent Project Context." Feature Announcement.

[97] OpenAI. (2025, November 10). "GPT-5.1 Thinking Mode: Enhanced Code Generation." Technical Documentation.

[98] Microsoft Azure. (2025, November). "Azure OpenAI Data Residency Options: EU and APAC Deployments." Compliance Blog.

[99] Comparative analysis of privacy policies. (2025, November). Anthropic vs. OpenAI documentation review by Vintage Voice News.

[100] IDC. (2025, September). "Enterprise AI Market Forecast: $50B in 2025, $200B+ by 2030." Market Research.

[101] Anthropic internal cost structure (estimated). (2025, November). Based on AWS Bedrock pricing and enterprise contract analysis by Vintage Voice News.

[102] OpenAI R&D budget estimate. (2025, August). The Information reporting based on financial disclosures.

[103] Anthropic headcount growth. (2024-2026). LinkedIn data and company announcements analyzed by Vintage Voice News.

[104] Amazon investment terms (reported). (2024, March). Financial Times analysis of Anthropic-Amazon agreement.

[105] Palantir. (2010-2025). "Vertical Specialization Strategy: Government and Enterprise Focus." Corporate strategy analysis.

[106] Anthropic margin projections (estimated). (2025, November). Financial modeling by Vintage Voice News based on comparable SaaS companies.

[107] EU AI Act and U.S. Executive Order implications. (2024-2025). Regulatory analysis by Vintage Voice News legal consultants.

[108] Big Tech lobbying efforts. (2024-2025). OpenSecrets data on AI regulation lobbying expenditures.

[109] Market share scenarios. (2025, November). Vintage Voice News analysis based on Gartner and IDC forecasts.

[110] Anthropic. (2023, September). "Responsible Scaling Policy: A Framework for AI Development." Policy Proposal.

[111] Critics' responses to Anthropic policy proposals. (2024-2025). EFF and other advocacy groups' public statements.

[112] AWS vs. Azure competitive history. (2010-2020). Cloud market analysis by Synergy Research Group.

[113] Salesforce vs. Oracle CRM competition. (2005-2015). Enterprise software market analysis by Gartner.

[114] Cloud computing maturity curve. (2010-2020). Technology adoption lifecycle analysis applied to generative AI by Vintage Voice News.

[115] Enterprise AI market projections. (2025-2030). IDC and Gartner research reports.

[116] Trust as competitive advantage in enterprise software. (2025, November). Harvard Business Review analysis.

About the Author: Taggart Buie is a technology analyst specializing in AI market dynamics and enterprise software adoption patterns. His work focuses on the intersection of innovation velocity and operational reliability in large-scale technology deployments.

Disclaimer: This analysis represents the author's independent research and opinions. Readers should conduct their own due diligence before making investment or technology procurement decisions. This article contains forward-looking projections that may not materialize.

Disclaimer: This analysis is for informational purposes only and does not constitute investment advice. Markets and competitive dynamics can change rapidly in the technology sector. Taggart is not a licensed financial advisor and does not claim to provide professional financial guidance. Readers should conduct their own research and consult with qualified financial professionals before making investment decisions.

Taggart Buie

Writer, Analyst, and Researcher