Google's Willow Chip: Quantum Computing's Verifiable Breakthrough and the $20 Billion Race to Practical Quantum

Google's Willow quantum chip achieved a historic milestone: the first verifiable quantum advantage, running a specific algorithm 13,000 times faster than one of the world's most powerful classical supercomputers. With 105 superconducting qubits and error correction that operates below the critical threshold, Willow marks the inflection point where quantum computing transitions from experimental physics to commercial reality. As the quantum market races toward $20 billion by 2030, Willow's breakthrough raises critical questions about who will win the quantum race, which applications will arrive first, and whether quantum computing will deliver on decades of extraordinary promises.

In December 2024, Google Quantum AI unveiled Willow, a 105-qubit superconducting quantum processor that achieved something researchers had pursued for decades: quantum error correction below the critical threshold. In October 2025, Google reported a second milestone on the same chip, verifiable quantum advantage. Running its Quantum Echoes algorithm, Willow completed a specific computation about 13,000 times faster than the best classical approach on one of the world's most powerful supercomputers. The result shows that quantum systems can outperform classical computers on verifiable tasks rather than only on hard-to-check benchmarks.

For the quantum computing industry, Willow represents an inflection point. Decades of promising experiments were hampered by error rates too high for practical use. Google's chip showed that enlarging an error-correcting code, which means using more physical qubits to protect each logical qubit, can actually reduce logical errors. That reverses one of quantum computing's fundamental challenges. This "below threshold" performance means quantum computers can, in principle, scale to larger systems without drowning in noise. It opens a pathway toward fault-tolerant machines capable of solving real-world problems in drug discovery, materials science, cryptography, and optimization.

Yet Willow's success arrives amid a quantum computing market experiencing explosive growth tempered by skepticism. Venture capital investments surpassed $2 billion in 2025, with the global quantum computing market projected to reach $20.2 billion by 2030, growing at 41.8% annually. But stock volatility among pure-play quantum companies like IonQ and Rigetti has raised bubble concerns, and experts warn that widespread commercial viability remains years away despite hardware breakthroughs.

This article examines Google's Willow breakthrough in context: the technical achievements that make it historic, the competitive landscape as IBM, Microsoft, Amazon, and startups race to build practical quantum systems, the market dynamics driving billions in investment, the applications closest to commercial reality, and the formidable challenges—from energy costs to talent shortages—that could delay quantum computing's transformative impact.

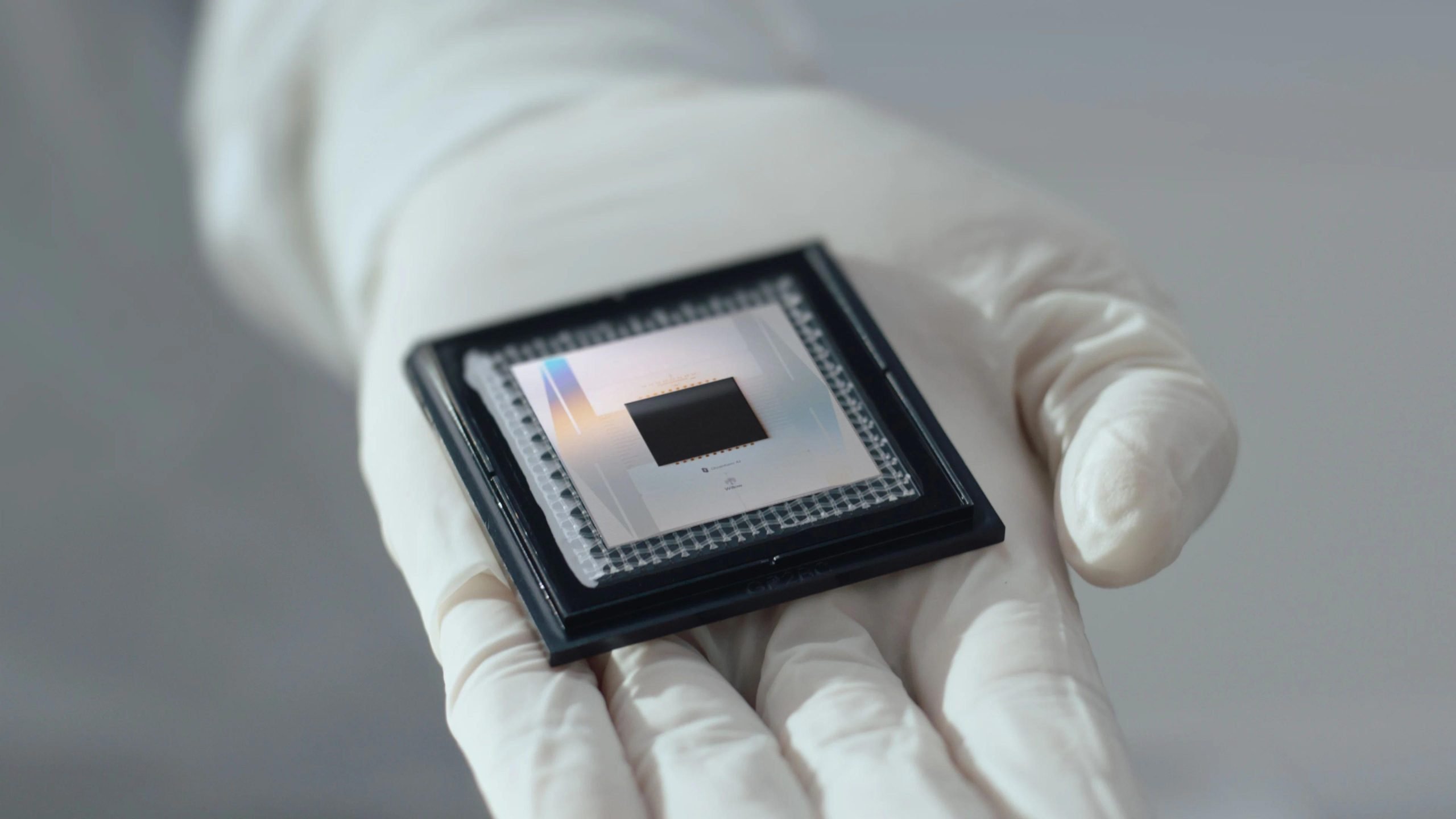

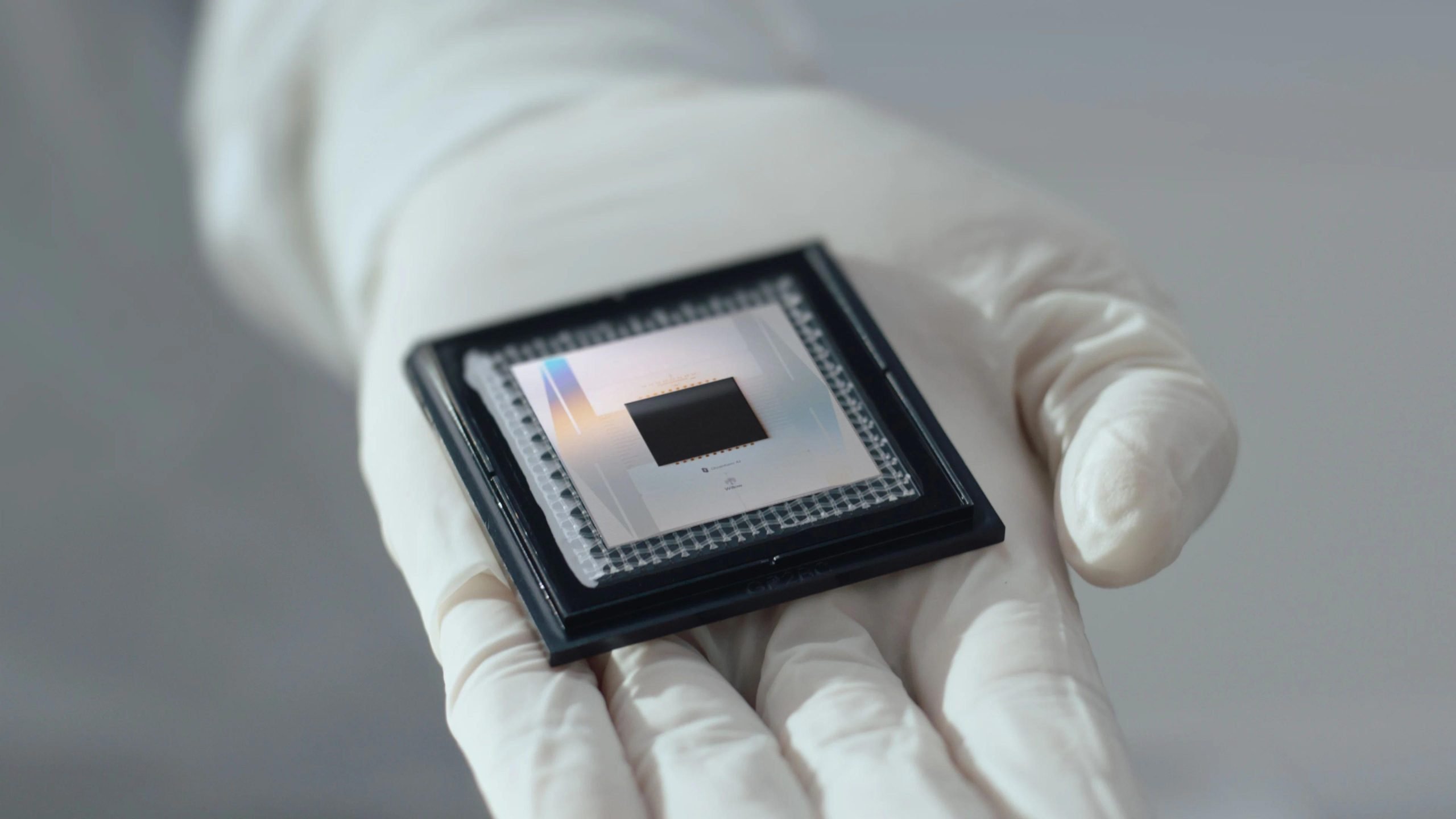

Willow's 105-qubit architecture achieved the first verifiable quantum advantage, demonstrating error suppression below critical thresholds—a milestone decades in the making

Understanding Willow's Breakthrough: What Makes It Historic

Google's announcement of Willow wasn't simply about building a larger quantum chip—it was about solving fundamental problems that have limited quantum computing since its inception. To understand why Willow matters, it's essential to grasp what quantum computers are, why they're so difficult to build, and what specific barriers Willow overcame.

Quantum Advantage vs. Quantum Supremacy: The Shift to Practical Problems

The term "quantum supremacy" entered public discourse in 2019 when Google claimed its 54-qubit Sycamore processor completed a calculation in 200 seconds that would take classical supercomputers 10,000 years. While technically impressive, critics noted the calculation was specifically designed to favor quantum systems and had no practical application—it was a demonstration of possibility, not utility.

Willow changes this narrative by achieving "verifiable quantum advantage" with the Quantum Echoes algorithm. Google has linked the algorithm to potential real-world applications in molecular structure analysis via nuclear magnetic resonance. According to Google's research published in Nature, Willow ran this algorithm about 13,000 times faster than the best classical approach on one of the world's fastest supercomputers. More importantly, the algorithm's results can be independently verified, which leaves far less room for debate about whether the quantum speedup is genuine or an artifact.

Google describes Quantum Echoes as a potential "molecular ruler" that could extend nuclear magnetic resonance techniques to measure distances and angles in molecular structures beyond the reach of current methods. If that holds up at scale, it points to applications in several areas:

- drug discovery (understanding how proteins fold and interact)
- materials science (designing new compounds with specific properties)
- fundamental physics research

Google argues that, for the first time, quantum computing has demonstrated clear, verifiable superiority on a problem with plausible practical relevance, not just a contrived benchmark.

Error Suppression: The "Below Threshold" Achievement

Quantum computing's Achilles' heel has always been error rates. Qubits are extraordinarily fragile, losing coherence and introducing errors from electromagnetic interference, temperature fluctuations, and even cosmic rays. Historically, adding more qubits made systems less reliable because each additional qubit introduced new error sources faster than error correction could compensate.

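Willow achieved a milestone experts call "below threshold" performance. In quantum error correction, the threshold is the physical error rate below which enlarging a code suppresses logical errors instead of multiplying them. Enlarging the code means adding more physical qubits to protect each logical qubit, and this suppression is what makes scalable quantum systems possible. Google grew a single logical qubit in three steps:

- a distance-3 surface code (9 data qubits)
- distance 5
- distance 7 (49 data qubits, or roughly 100 physical qubits once measurement qubits are counted)

The logical error rate fell exponentially, dropping by a factor of about two with each step. Google describes this as the first convincing demonstration of a quantum system crossing this threshold, a result Hartmut Neven, head of Google Quantum AI, described as a "truly remarkable breakthrough."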
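
Exponential suppression can be made concrete with a back-of-the-envelope projection. The sketch below rests on two assumptions:

- The suppression factor Λ, the amount the logical error rate drops each time code distance rises by two, stays near 2.14, the value Google reported for Willow. Holding Λ constant at larger distances is an optimistic assumption, not a measured result.
- The starting point is Willow's distance-7 logical error rate of about 0.14% per correction cycle.

The physical-qubit count uses the rotated-surface-code estimate of 2d² - 1 qubits per logical qubit. The function names are illustrative.

```python
import math

# Assumed inputs: approximate figures reported for Willow's distance-7 memory.
LAMBDA = 2.14      # error suppression per +2 in code distance (assumed to stay constant)
EPS_D7 = 1.43e-3   # logical error per correction cycle at distance 7


def logical_error(distance: int, eps_ref: float = EPS_D7, d_ref: int = 7,
                  lam: float = LAMBDA) -> float:
    """Project the logical error per cycle at an odd code distance."""
    steps = (distance - d_ref) / 2
    return eps_ref / lam ** steps


def distance_for_target(target: float) -> int:
    """Smallest odd distance (from 7 upward) whose projected error meets the target."""
    d = 7
    while logical_error(d) > target:
        d += 2
    return d


for d in (3, 5, 7, 11, 15):
    print(f"d={d:2d}: ~{logical_error(d):.1e} logical errors per cycle")

d_needed = distance_for_target(1e-6)
print(f"target 1e-6 needs d={d_needed}, about {2 * d_needed**2 - 1} physical qubits")
```

Under these assumptions, a one-in-a-million logical error rate would need roughly distance 27. That is on the order of 1,500 physical qubits for a single logical qubit.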

Three advances made the achievement possible:

- fabrication improvements that reduced defects in the superconducting circuits
- control systems that minimize environmental noise
- fast decoders that interpret error-syndrome measurements, in real time for smaller codes

Together, these innovations brought Willow's physical error rates to roughly 0.1% to 0.3% per gate operation, below the approximately 1% threshold of the surface code. Its best logical qubit reached an error rate of about 0.14% per correction cycle. That is still far from the logical error rates of 0.001% and below that fault-tolerant quantum computing is expected to require, but it is a significant step toward practical systems.

Extended Coherence Times: Keeping Qubits Stable

Another critical metric is coherence time: how long qubits maintain their quantum states before decohering. Google reports that Willow's coherence (T1) times are approaching 100 microseconds. That is roughly a fivefold improvement over its previous generation of chips and well above the 20-50 microseconds typical of many earlier superconducting systems. This matters because longer coherence allows more computational steps before errors accumulate beyond what correction can handle.
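
A rough model shows why coherence time matters. If a qubit's excited state decays with a characteristic time T1, the chance it survives an elapsed time t is about exp(-t/T1). The sketch counts how many back-to-back operations fit before that survival probability drops below a chosen level, comparing T1 values of 20, 50, and 100 microseconds. The 25-nanosecond operation time and the 99% survival cutoff are hypothetical values chosen for illustration. The model also ignores every error source except energy relaxation.

```python
import math


def survival_probability(elapsed_s: float, t1_s: float) -> float:
    """Probability a qubit has not relaxed after elapsed_s, assuming pure exponential T1 decay."""
    return math.exp(-elapsed_s / t1_s)


def max_sequential_ops(t1_s: float, op_time_s: float, min_survival: float) -> int:
    """Largest n with exp(-n * op_time / T1) >= min_survival."""
    return math.floor(-t1_s * math.log(min_survival) / op_time_s)


OP_TIME = 25e-9       # hypothetical duration of one operation (25 ns)
MIN_SURVIVAL = 0.99   # hypothetical acceptable survival probability

for t1_us in (20, 50, 100):
    n = max_sequential_ops(t1_us * 1e-6, OP_TIME, MIN_SURVIVAL)
    print(f"T1 = {t1_us:3d} us -> about {n} operations before survival drops below 99%")
```

In this simplified model the operation budget scales linearly with T1, so a fivefold coherence improvement buys roughly five times as many steps.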

Recent NIST research extended qubit coherence to 0.6 milliseconds using alternative qubit architectures, suggesting further improvements are possible. Combined with error correction, extending coherence times creates a multiplicative effect: each microsecond of additional stability allows more complex algorithms and reduces the overhead needed for error correction, making quantum systems more practical for longer computations.

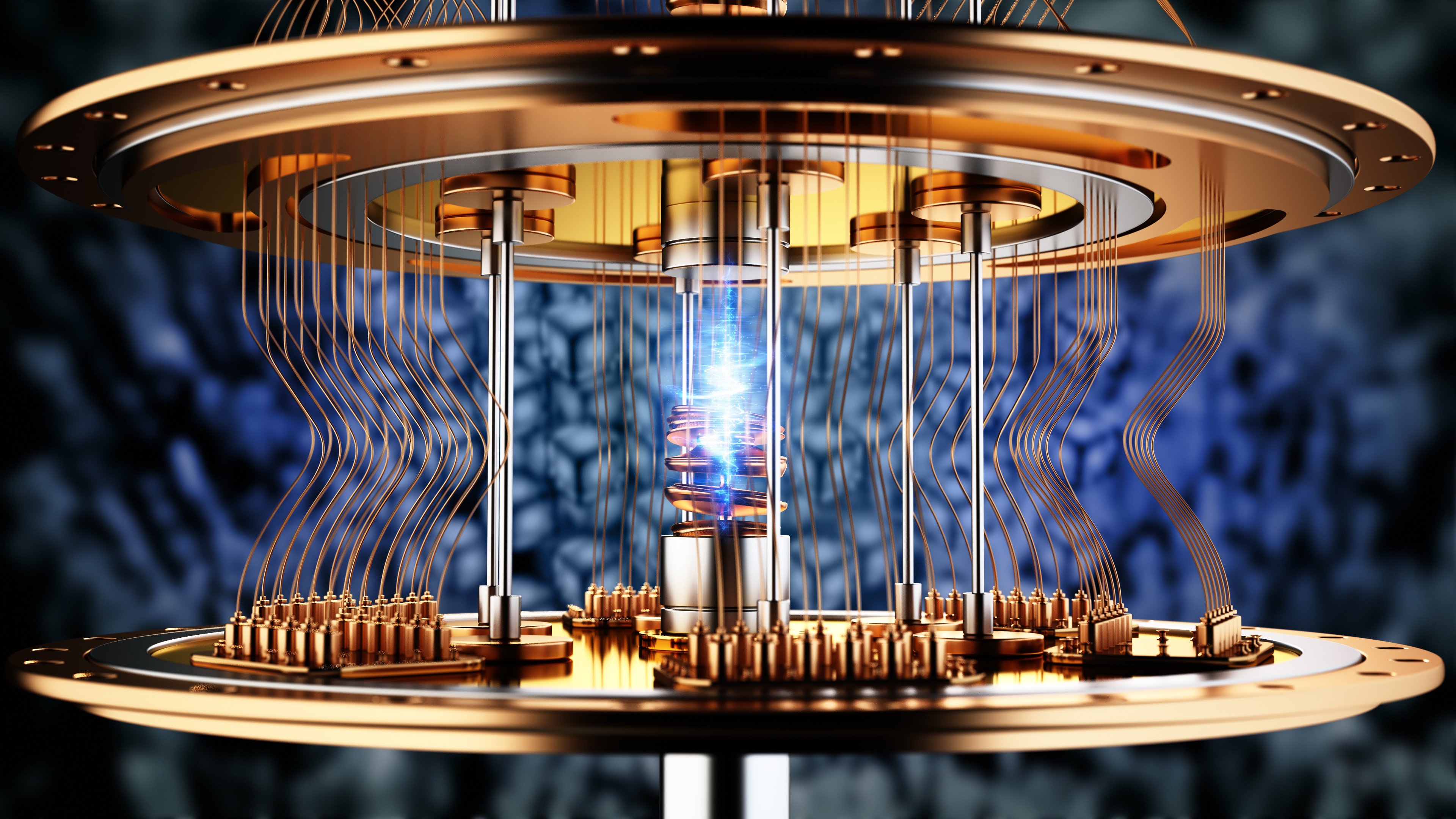

Quantum computers operate at temperatures near absolute zero, requiring sophisticated cryogenic infrastructure to maintain qubit stability

The Competitive Landscape: A Quantum Arms Race

Willow's breakthrough doesn't occur in isolation—it's part of an intensifying race involving tech giants, startups, and governments investing billions in quantum computing. Each player pursues different technical approaches, creating a diverse ecosystem where no single architecture has yet proven definitively superior.

IBM's Quantum Roadmap: Targeting 200 Logical Qubits by 2029

IBM has pursued quantum computing longer than almost any company, and its roadmap remains aggressive. In 2025, IBM announced plans for the Nighthawk processor, expected by year's end. Nighthawk features a square-lattice layout that connects each qubit to more neighbors, along with tighter integration with classical computing systems. More ambitiously, IBM targets 200 logical qubits by 2029 using low-density parity-check (LDPC) codes, which IBM says cut error correction overhead by about 90% compared to surface codes.
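
Simple qubit arithmetic shows where the 90% figure comes from. IBM's published "gross" code, one of its bivariate bicycle LDPC codes, has parameters [[144, 12, 12]]. That means 144 data qubits encode 12 logical qubits at distance 12, and another 144 check qubits bring the total to 288. The sketch compares that with giving each of the same 12 logical qubits its own rotated surface code at distance 12, estimated at 2d² - 1 physical qubits per patch. It counts only qubits and ignores routing and other overheads. It is a simplification, not IBM's own accounting.

```python
def surface_code_qubits(distance: int) -> int:
    """Physical qubits in one rotated surface-code patch: d*d data + (d*d - 1) measure."""
    return 2 * distance * distance - 1


def overhead_saving(logical: int, distance: int, ldpc_physical: int) -> float:
    """Fractional saving of an LDPC block versus one surface-code patch per logical qubit."""
    return 1 - ldpc_physical / (logical * surface_code_qubits(distance))


# Gross code [[144, 12, 12]]: 144 data + 144 check qubits.
GROSS_PHYSICAL = 288
GROSS_LOGICAL = 12
GROSS_DISTANCE = 12

surface_total = GROSS_LOGICAL * surface_code_qubits(GROSS_DISTANCE)
saving = overhead_saving(GROSS_LOGICAL, GROSS_DISTANCE, GROSS_PHYSICAL)
print(f"surface code: {surface_total} qubits, gross code: {GROSS_PHYSICAL} qubits")
print(f"approximate saving: {saving:.0%}")
```

Under this rough comparison the LDPC block uses about a twelfth of the qubits, in line with the "about 90%" claim.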

IBM's strategy emphasizes hybrid quantum-classical systems, recognizing that near-term quantum computers won't operate in isolation but rather as accelerators for specific steps in larger classical workflows. The company's Qiskit software framework has become an industry standard, enabling researchers worldwide to design quantum algorithms and test them on IBM's cloud-accessible quantum systems. This open ecosystem approach contrasts with Google's more closed research model, potentially giving IBM advantages in community adoption even if Google maintains technical leads.
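
IBM's hybrid pattern has a classical program repeatedly call a quantum processor for one step. That pattern can be sketched without any quantum hardware:

- A hypothetical `quantum_expectation` function stands in for the quantum step. It returns the exact expectation value of Z for a single qubit rotated by R_y(θ), which is cos θ.
- A classical loop tunes θ by gradient descent, computing gradients with the parameter-shift rule.

Variational algorithms share this structure. Here, though, everything runs classically and no Qiskit API is used.

```python
import math


def quantum_expectation(theta: float) -> float:
    """Hypothetical stand-in for a quantum call: <Z> after R_y(theta) on |0>, i.e. cos(theta)."""
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient from two extra 'quantum' evaluations via the parameter-shift rule."""
    shift = math.pi / 2
    return (quantum_expectation(theta + shift) - quantum_expectation(theta - shift)) / 2


def hybrid_minimize(theta: float = 0.1, learning_rate: float = 0.4, steps: int = 100) -> float:
    """Classical optimizer loop that sees the quantum step only through function calls."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta


best = hybrid_minimize()
print(f"theta ~ {best:.4f}, <Z> ~ {quantum_expectation(best):.4f}")  # approaches pi and -1
```

On real hardware, the expectation value would be estimated from repeated measurements, adding statistical noise that this toy omits.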

IBM's challenge is translating roadmap ambitions into working hardware. Previous targets have slipped, and the company faces competition from newer, more agile competitors willing to take risks IBM's corporate structure may constrain. Still, IBM's decades of quantum research experience and extensive patent portfolio make it a formidable player that can't be discounted.

Microsoft's Topological Qubits: A Different Bet on Stability

Microsoft has pursued a radically different approach with its Majorana 1 chip, based on topological qubits. These are built from Majorana zero modes, exotic quasiparticle states that theory predicts should be inherently more stable than conventional superconducting qubits. The reason is that the quantum information is encoded in the topology of the system rather than in fragile local energy levels. In 2025, Microsoft announced breakthroughs in topological qubit production, claiming reduced error rates and enhanced stability that could leapfrog competitors.

The challenge with topological qubits is they've proven extraordinarily difficult to manufacture consistently. Microsoft spent years facing skepticism about whether topological qubits were even achievable at scale. Recent progress suggests the technology may be maturing, but Microsoft remains years behind in qubit counts—Majorana 1 has far fewer qubits than Willow, though each may be more reliable.

Microsoft's advantage is its software ecosystem. The company's Q# quantum programming language, Azure Quantum cloud platform, and integration with classical Azure infrastructure create a compelling value proposition: if Microsoft can deliver stable topological qubits, it may attract enterprise customers more readily than competitors because organizations already rely on Microsoft's technology stack.

Amazon's Cat Qubits and IonQ's Trapped Ions: Alternative Architectures

Amazon Web Services entered quantum computing with its Braket platform, offering access to multiple quantum hardware providers. In 2025, Amazon introduced the Ocelot chip featuring "cat qubits," a superconducting approach that stores information in superpositions of microwave photon states inside a resonator. Cat qubits are designed to suppress bit-flip errors intrinsically, so error correction mainly has to handle phase flips. That promises lower error correction overhead and potentially smaller, more efficient quantum computers.
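
Suppressing one error type helps because the remaining type can be handled by a simple code. If bit flips are already rare, a repetition code can handle the remaining phase flips: store the same information in n qubits and take a majority vote. The sketch computes the probability that a majority vote fails when each of n qubits independently suffers an error with probability p. The error rates are illustrative assumptions, not Ocelot's measured figures. Real decoders also face correlated and measurement errors that this model ignores.

```python
from math import comb


def repetition_logical_error(n: int, p: float) -> float:
    """Probability that more than half of n independent qubits err (majority vote fails).

    Assumes odd n and independent errors with probability p per qubit.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of qubits so the vote cannot tie")
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))


for p in (0.01, 0.001):          # illustrative per-qubit phase-flip probabilities
    for n in (1, 3, 5):
        print(f"p={p}, n={n}: logical error ~ {repetition_logical_error(n, p):.2e}")
```

Each added pair of qubits multiplies the protection, but only against the one error type the code targets. That is why suppressing bit flips at the hardware level matters.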

Meanwhile, IonQ has focused on trapped-ion quantum computers, where qubits are individual charged atoms suspended by electromagnetic fields. Trapped-ion systems are known for high gate fidelities (the accuracy of quantum operations), with IonQ's recent demonstrations reporting two-qubit gate fidelities of 99.9% and above. Willow's two-qubit gates, by comparison, have error rates of a few tenths of a percent, or roughly 99.7% fidelity. In 2025, IonQ reported a 12% performance edge over classical computing in a medical device simulation. It also announced acquisitions of Qubitekk, a quantum networking company, and Oxford Ionics, which develops chip-based ion-trap hardware.
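
Small differences in fidelity matter because errors compound. Assume gate errors are independent (a simplification). Then a circuit's chance of running without any error is roughly the product of its gate fidelities, F^n for n gates of fidelity F. The sketch compares 99.7%, 99.9%, and 99.99% fidelities over circuits of increasing length. These are round illustrative values; real error models, with coherent errors, crosstalk, and measurement error, are messier.

```python
def circuit_success(fidelity: float, gates: int) -> float:
    """Chance that every gate succeeds, assuming independent errors: F ** gates."""
    return fidelity ** gates


for fidelity in (0.997, 0.999, 0.9999):
    row = ", ".join(f"{n} gates: {circuit_success(fidelity, n):.3f}" for n in (100, 1_000, 10_000))
    print(f"F = {fidelity}: {row}")
```

At 99.9% fidelity, a 1,000-gate circuit runs error-free only about 37% of the time. Long computations therefore need error correction, not just better raw fidelity.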

The trapped-ion approach scales differently from superconducting systems. Connecting many trapped ions is challenging, but each ion is identical and highly controllable. IonQ's roadmap targets 1,024 algorithmic qubits by 2028. "Algorithmic qubits" is IonQ's benchmark for how many qubits can reliably run application-style circuits, so the figure is far below superconducting systems' raw qubit counts but potentially more useful because of higher quality.

Startups and Wildcards: Infleqtion, Rigetti, and D-Wave

Beyond tech giants, startups bring innovation and risk-taking. Infleqtion showcased its Sqale quantum computer at NVIDIA GTC 2025, demonstrating neutral atom-based technology with plans for a fault-tolerant system with over 1,000 logical qubits by 2030. Neutral atoms, like trapped ions, offer high fidelity but face scaling challenges. Infleqtion's collaboration with NVIDIA on hybrid quantum-AI systems suggests a strategy of positioning quantum as a specialized accelerator for AI workloads.

Rigetti Computing demonstrated real-time error correction in partnership with Riverlane, achieving correction fast enough to prevent error accumulation during computation, a critical requirement for practical quantum systems. D-Wave has taken a completely different approach with quantum annealing, a specialized technique for optimization problems. D-Wave's Advantage2 processor features over 4,400 qubits, far exceeding the qubit counts of gate-model quantum computers. Those qubits are not directly comparable to gate-model qubits, though, and can only solve specific problem types.

This diversity of approaches (superconducting, topological, trapped ion, neutral atom, and quantum annealing) reflects quantum computing's immaturity. In emerging technologies, multiple architectures coexist until one proves definitively superior. Examples include the RISC-versus-CISC contest in 1980s processor design and electric versus hydrogen vehicles today. The winner is not yet clear, but Willow's breakthrough suggests superconducting qubits have significant momentum.

The quantum computing race involves thousands of researchers across companies and universities worldwide, pushing the boundaries of physics and engineering

Market Dynamics: A $20 Billion Bet on Quantum's Future

The quantum computing market in 2025 exhibits characteristics of both rational investment in transformative technology and speculative bubble. Understanding which characterization is more accurate requires examining investment flows, market projections, and the business models emerging around quantum computing.

Explosive Growth Projections: $3.5B to $20.2B by 2030

Multiple research firms project the quantum computing market growing from $3.5 billion in 2025 to $20.2 billion by 2030, representing a 41.8% compound annual growth rate. These projections account for hardware sales, cloud-based quantum computing services (Quantum-as-a-Service), software platforms, and consulting services helping enterprises identify quantum use cases.
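
The quoted growth rate can be checked directly. A compound annual growth rate (CAGR) is (end / start)^(1 / years) - 1. The sketch treats 2025 to 2030 as five compounding years, which is an assumption about how the forecasts count their period.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


rate = cagr(3.5, 20.2, 5)           # market size in billions of dollars, 2025 -> 2030
print(f"implied CAGR: {rate:.1%}")  # close to the quoted 41.8%
```

The result is about 42%, consistent with the quoted figure. The small gap likely reflects rounding in the start and end values.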

The growth drivers are straightforward: as quantum computers cross practical thresholds, more applications become economically viable, expanding the addressable market. Early adopters in pharmaceuticals, finance, and materials science are willing to pay premium prices for access to quantum resources, even in current limited form, because the potential competitive advantages—discovering blockbuster drugs, optimizing portfolios, designing revolutionary materials—justify high R&D expenditures.

However, projections also embed assumptions that quantum computing will achieve technical milestones on aggressive timelines. If error rates don't continue improving, if scaling stalls, or if classical computing advances faster than expected (potentially solving problems thought to require quantum approaches), market growth could disappoint. The history of technology is littered with overhyped markets—from 3D printing to virtual reality to blockchain—where growth projections assumed smooth technical progress that reality didn't deliver.

Investment Surge: $2B+ in Venture Capital, But Stock Volatility Raises Concerns

Venture capital investments in quantum computing exceeded $2 billion in 2025, with major funding rounds for private companies such as PsiQuantum, alongside large stock offerings by publicly traded IonQ and Rigetti. Institutional investors including JPMorgan Chase have committed billions to quantum and other strategic technologies, viewing quantum as a long-term competitive necessity similar to AI investments.

Government funding has also accelerated. The U.S. government contracted Nvidia to build seven supercomputers integrating quantum accelerators, signaling official recognition of quantum's strategic importance. Maryland has positioned itself as a quantum hub, with Microsoft's center at the University of Maryland, IonQ's expansions, and state-backed investments supported by DARPA. Globally, China's quantum funding and Europe's Quantum Flagship program intensify the race, framing quantum computing as a matter of national competitiveness.

Yet stock market performance for pure-play quantum companies has been volatile. IonQ and Rigetti saw massive gains in late 2025 amid contract wins and technical announcements, but corrections followed as analysts questioned valuations. Some observers, including The Motley Fool, cautioned about potential quantum computing stock bubbles, noting that companies with minimal revenue are valued at billions based on future potential rather than current earnings.

The risk is that hype-driven valuations become disconnected from business fundamentals. IonQ, for example, relies heavily on stock issuance and government contracts for funding, with commercial revenue still modest. If quantum commercialization takes longer than markets expect, valuations could collapse, similar to the dot-com bust where real innovations were drowned in speculation that outpaced reality.

Quantum-as-a-Service: Democratizing Access or Delaying Monetization?

One business model gaining traction is Quantum-as-a-Service (QaaS), where companies like IBM, Microsoft, Amazon, and startups like SpinQ offer cloud-based access to quantum computers. Instead of requiring customers to purchase and maintain multimillion-dollar quantum systems, QaaS allows researchers and enterprises to run quantum algorithms via cloud APIs, paying per use.

QaaS democratizes quantum access, enabling universities, startups, and enterprises to experiment without massive capital investments. This accelerates learning and application development, building the ecosystem needed for eventual widespread adoption. IBM Quantum, Microsoft Azure Quantum, and Amazon Braket have onboarded thousands of users, creating a community exploring quantum's potential.

However, QaaS also delays monetization. Current quantum computers are too limited for most commercial applications, meaning QaaS revenue remains modest—primarily from research institutions and pilot projects rather than production workloads generating recurring revenue. For pure-play quantum companies, this creates a cash flow challenge: they must continue raising capital to fund hardware development while commercial revenue remains years away.

The QaaS model mirrors cloud computing's early days, when AWS launched in 2006 with limited services before scaling to a $90 billion business. If quantum follows a similar trajectory, today's investments will seem prescient. But if technical barriers delay commercialization beyond investors' patience, the QaaS model could become a "bridge to nowhere," providing access without generating returns.

Financial services firms are investing heavily in quantum computing for portfolio optimization, risk analysis, and post-quantum cryptography—applications that could justify quantum's cost in near-term deployments

Applications on the Horizon: What Quantum Computing Will Actually Do

Despite quantum computing's mystique, its practical applications are surprisingly specific. Understanding what quantum computers will and won't be good at clarifies why companies are investing billions and which industries will see impact first.

Drug Discovery and Molecular Simulation: The Killer App?

Quantum computers excel at simulating quantum systems, such as the atomic and molecular interactions that classical computers struggle to model accurately. This makes drug discovery and materials science perhaps the most promising near-term applications. Pharmaceutical companies spend billions of dollars and often more than a decade bringing a new drug to market. Much of that time goes to understanding how molecular compounds interact with biological targets.

Willow's Quantum Echoes algorithm is an early step in this direction, aimed at extracting structural information about molecules. Imagine designing a drug molecule and being able to simulate precisely how it will bind to a target protein, predict side effects, and optimize its structure, all in silico before expensive laboratory testing. Proponents argue this could compress drug development timelines from 10-15 years to 5-7 years, saving billions and bringing treatments to patients faster.

Companies like Amgen have partnered with quantum computing providers to explore applications in protein folding and drug interaction modeling. Current quantum computers lack the scale for full drug simulation, but incremental progress is occurring. Quantum-assisted algorithms are already being tested to narrow the search space for drug candidates. As quantum systems scale, they may take on more complex simulations that are classically intractable.

Materials science offers parallel opportunities. Designing new battery materials, superconductors, or catalysts requires understanding quantum mechanical properties at atomic scales. Quantum computers could accelerate discovery of materials for energy storage, carbon capture, or electronics—applications with massive economic and environmental implications.

Financial Optimization and Risk Analysis: Where Money Meets Quantum

Financial institutions are among the most enthusiastic quantum computing investors because several core problems—portfolio optimization, risk modeling, and derivative pricing—are computationally intensive and potentially quantum-solvable.

Portfolio optimization involves selecting asset combinations that maximize returns while minimizing risk, considering thousands of securities with complex correlations. This is mathematically an optimization problem that classical computers solve via approximations. Quantum algorithms like QAOA (Quantum Approximate Optimization Algorithm) could find better solutions faster, potentially improving returns by fractions of a percent—which translates to millions in profit for large funds.
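
A tiny binary version of the mean-variance problem shows why portfolio selection gets hard. Variants of this problem are often used to benchmark QAOA-style algorithms: choose exactly k of n assets, weighted equally, to maximize expected return minus a risk penalty. The returns, covariances, and risk-aversion value below are made-up numbers. The exhaustive search is a classical baseline, not a quantum algorithm, and its cost grows with the number of candidate subsets, which explodes for thousands of assets.

```python
from itertools import combinations
from typing import Tuple

# Made-up inputs for four hypothetical assets.
RETURNS = [0.10, 0.07, 0.12, 0.05]   # expected returns
COV = [                              # covariance matrix (risk)
    [0.040, 0.006, 0.012, 0.002],
    [0.006, 0.020, 0.004, 0.001],
    [0.012, 0.004, 0.060, 0.003],
    [0.002, 0.001, 0.003, 0.010],
]
RISK_AVERSION = 1.0


def objective(selected: Tuple[int, ...]) -> float:
    """Expected return minus RISK_AVERSION * variance for an equal-weight selection."""
    w = 1 / len(selected)
    ret = sum(w * RETURNS[i] for i in selected)
    var = sum(w * w * COV[i][j] for i in selected for j in selected)
    return ret - RISK_AVERSION * var


def best_portfolio(k: int) -> Tuple[int, ...]:
    """Score every k-asset subset exhaustively (C(n, k) candidates) and keep the best."""
    return max(combinations(range(len(RETURNS)), k), key=objective)


print(best_portfolio(2), round(objective(best_portfolio(2)), 4))
```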

JPMorgan Chase, Goldman Sachs, and other institutions have established quantum research teams exploring these applications. Current quantum computers can't yet outperform classical systems on real portfolios (which involve tens of thousands of assets), but proof-of-concept demonstrations show promise. As quantum systems scale, finance may be an early commercial market because even small advantages justify quantum's costs when applied to trillion-dollar asset pools.

Cryptography is the flip side: quantum computers threaten current encryption methods. Shor's algorithm, published in 1994, can factor large numbers in polynomial time, far faster than the best known classical methods. That would break the RSA encryption that secures internet communications, financial transactions, and government secrets. So far it has only been run on toy numbers such as 15. Even so, the threat has spurred development of post-quantum cryptography, encryption methods resistant to quantum attacks.
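
Shor's algorithm splits into a quantum part and a classical part. The quantum computer's job is to find the period r of f(x) = a^x mod N; turning that period into factors is ordinary arithmetic. The sketch finds the period by brute force. That step is exponentially slow on a classical machine, and it is exactly the step Shor's algorithm speeds up. The sketch then applies the standard gcd post-processing. It is only practical for toy numbers.

```python
from math import gcd
from typing import Optional, Tuple


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (brute force; assumes gcd(a, n) == 1)."""
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical_step(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Turn the period of a mod n into a nontrivial factor pair, or None if this a fails."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g               # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None                    # odd period: retry with another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                    # a^(r/2) = -1 mod n: retry with another a
    p = gcd(half - 1, n)
    return p, n // p


print(shor_classical_step(15, 7))   # period 4, factors (3, 5)
```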

The timeline for cryptographically relevant quantum computers (CRQCs) remains uncertain, with estimates ranging from 2030 to 2040 and beyond. Even so, organizations are already transitioning to quantum-resistant encryption. The National Institute of Standards and Technology (NIST) published its first post-quantum cryptography standards (FIPS 203, 204, and 205) in August 2024, and governments mandate their adoption for classified systems. Ironically, the threat of quantum computing is driving commercial opportunity in quantum-safe security solutions.

Logistics and Supply Chain Optimization: Quantum Tackles NP-Hard Problems

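Another promising application is optimization in logistics and supply chain management. Examples include vehicle routing (finding optimal delivery paths for fleets), job scheduling, and network design. These problems are often NP-hard: no known classical algorithm solves large instances exactly in reasonable time, so practitioners rely on approximations. Quantum computers are not expected to solve NP-hard problems efficiently in general either. The hope is that they find better or faster approximate solutions on some instances.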
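
A small routing example shows why large instances force approximations. The sketch compares two methods on a toy traveling-salesman tour:

- An exact solver checks every ordering, which takes (n - 1)! candidate routes.
- A greedy nearest-neighbor heuristic runs quickly but can return a longer route.

The stop coordinates are invented, and neither method is quantum. They illustrate the classical trade-off between exactness and speed.

```python
from itertools import permutations
from math import dist

# Invented stop coordinates; index 0 is the depot where every route starts and ends.
STOPS = [(0, 0), (2, 0), (-4, 0), (9, 0)]


def route_length(order) -> float:
    """Total distance of depot -> stops in the given order -> depot."""
    path = [0, *order, 0]
    return sum(dist(STOPS[a], STOPS[b]) for a, b in zip(path, path[1:]))


def exact_route():
    """Check all (n - 1)! orderings of the non-depot stops."""
    return min(permutations(range(1, len(STOPS))), key=route_length)


def nearest_neighbor_route():
    """Greedy heuristic: always drive to the closest unvisited stop."""
    unvisited, current, order = set(range(1, len(STOPS))), 0, []
    while unvisited:
        nxt = min(unvisited, key=lambda s: dist(STOPS[current], STOPS[s]))
        order.append(nxt)
        unvisited.remove(nxt)
        current = nxt
    return tuple(order)


print("exact:", route_length(exact_route()))              # 26.0
print("greedy:", route_length(nearest_neighbor_route()))  # 30.0
```

The greedy route is about 15% longer here. With dozens of stops, though, the exact search becomes infeasible while the heuristic stays fast.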

Quantum annealing systems like D-Wave's are specifically designed for optimization, and companies like Volkswagen, Airbus, and DHL have piloted quantum algorithms for logistics. Results are mixed: in some cases, quantum systems found better solutions faster; in others, classical algorithms optimized for specific problems performed just as well.
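
Annealers such as D-Wave's accept problems written as a QUBO (quadratic unconstrained binary optimization): minimize a sum of linear and pairwise terms over binary variables. The sketch writes a tiny max-cut problem in that form and minimizes it with classical simulated annealing, the thermal algorithm that quantum annealing is often compared against. It does not call D-Wave's Ocean SDK or model quantum tunneling. The graph, temperature schedule, and seed are arbitrary choices.

```python
import math
import random
from itertools import product

# Max-cut on a 4-node cycle with one chord: edges as (i, j) pairs.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
N = 4


def qubo_energy(x) -> int:
    """Each edge adds -x_i - x_j + 2*x_i*x_j: -1 if the edge is cut, else 0."""
    return sum(-x[i] - x[j] + 2 * x[i] * x[j] for i, j in EDGES)


def simulated_annealing(steps: int = 5000, t_start: float = 2.0, t_end: float = 0.05,
                        seed: int = 7):
    """Classical simulated annealing with single-bit flips and geometric cooling."""
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(N)]
    energy = qubo_energy(x)
    best_x, best_e = x[:], energy
    for step in range(steps):
        temperature = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(N)
        x[i] ^= 1                                  # propose flipping one bit
        new_energy = qubo_energy(x)
        delta = new_energy - energy
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            energy = new_energy                    # accept the move
            if energy < best_e:
                best_x, best_e = x[:], energy
        else:
            x[i] ^= 1                              # reject: undo the flip
    return best_x, best_e


best_x, best_e = simulated_annealing()
brute_force = min(qubo_energy(bits) for bits in product((0, 1), repeat=N))
print(best_x, best_e, brute_force)
```

The brute-force check confirms the annealer's answer on this toy instance. On real problems, no such check is affordable, which is why results like those in the pilots above are hard to compare.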

The key insight is that quantum computing won't replace classical systems for all optimization—it will be used selectively for problems where classical approaches struggle. As quantum hardware matures and algorithms improve, more logistics applications will justify quantum's cost by saving fuel, reducing delivery times, or improving resource utilization in ways that generate measurable ROI.

Machine Learning and AI: Quantum's Role in the AI Era

The intersection of quantum computing and artificial intelligence is tantalizing but speculative. Quantum machine learning algorithms promise faster training of AI models or better handling of certain data structures, but practical demonstrations remain limited.

Google's collaboration with NVIDIA on hybrid quantum-AI systems explores using quantum processors as specialized accelerators for AI workloads. The idea is that certain steps in machine learning—like sampling from complex probability distributions or optimizing high-dimensional loss landscapes—might benefit from quantum speedup. However, most AI's computational load involves matrix multiplications on GPUs, a task where classical systems are highly optimized and quantum computers offer no clear advantage.

Quantum machine learning may become important in niche applications—like quantum chemistry data where quantum computers naturally handle quantum information—but expectations should be tempered. AI's explosive growth is driven by scaling classical neural networks, and quantum computing won't replace that anytime soon.

Quantum computing leverages superposition and entanglement—quantum phenomena with no classical analog—to process information in fundamentally new ways

Challenges and Roadblocks: Why Quantum's Future Isn't Guaranteed

Despite breakthroughs like Willow, formidable challenges could slow or even derail quantum computing's path to widespread impact. Recognizing these obstacles provides a more balanced view of the technology's prospects.

The Error Correction Overhead Problem: Still Far from Fault Tolerance

While Willow achieved below-threshold error correction, the overhead remains enormous. In the surface code, a logical qubit at code distance d needs roughly 2d² physical qubits. Logical qubits reliable enough for meaningful computation are therefore expected to cost hundreds to thousands of physical qubits each. Google's distance-7 demonstration used 49 data qubits, roughly 100 physical qubits in all, to produce one logical qubit that is still far from reliable enough for long algorithms. With today's codes, that overhead ratio makes large-scale quantum computing prohibitively expensive.
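
The overhead compounds quickly at machine scale. The sketch totals the physical qubits for a hypothetical machine of 1,000 logical qubits at several code distances. It uses the rotated-surface-code estimate of 2d² - 1 physical qubits per logical qubit (d² data qubits plus d² - 1 measurement qubits). It ignores the extra qubits real designs need for routing, magic-state production, and leakage removal, so actual counts would be higher.

```python
def physical_per_logical(distance: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1


LOGICAL_QUBITS = 1_000   # hypothetical machine size

for d in (3, 7, 15, 25):
    total = LOGICAL_QUBITS * physical_per_logical(d)
    print(f"d={d:2d}: {physical_per_logical(d):5d} per logical, {total:>9,} total")
```

Even a modest 1,000-logical-qubit machine crosses a million physical qubits at distance 25.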

Consider Shor's algorithm against RSA-2048 encryption, a benchmark for cryptographically relevant quantum computing. A widely cited 2019 estimate called for about 20 million physical qubits with error rates comparable to Willow's (around 0.1%). A 2025 analysis by Google's Craig Gidney lowered that to under one million, with a runtime of under a week. Scaling from 105 qubits to even a million while maintaining error rates is an engineering challenge comparable to building fusion reactors or sending humans to Mars. It is not impossible, but it will require decades of sustained effort and investment.

Researchers are exploring lower-overhead error correction codes. IBM's low-density parity-check codes promise 90% overhead reductions, and Microsoft's topological qubits are designed to need less correction if they work as intended. But even with improved codes, the path from hundreds of qubits to millions spans years or decades, and each scaling step introduces new engineering challenges that could slow progress.

Energy and Infrastructure Costs: The Physics of Ultra-Cold Computing

Superconducting quantum computers like Willow operate at temperatures near absolute zero (around 15 millikelvin), requiring dilution refrigerators that consume significant energy. The qubits themselves dissipate very little power. The classical infrastructure supporting them (cryogenic systems, control electronics, error correction processors) draws far more, and at scale its consumption could reach megawatts.
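
Physics sets a floor on that cooling cost. An ideal (Carnot) refrigerator removes heat at a cold temperature T_c and rejects it at room temperature T_h. It needs at least (T_h - T_c) / T_c joules of work per joule of heat removed. The sketch evaluates this bound at 15 millikelvin and two warmer refrigerator stages. Real dilution refrigerators fall far short of Carnot efficiency, so these figures are optimistic lower bounds. The 300 K room temperature and the stage temperatures are round assumptions.

```python
def min_work_per_joule(t_cold_k: float, t_hot_k: float = 300.0) -> float:
    """Carnot bound: minimum work (J) to pump 1 J of heat from t_cold_k up to t_hot_k."""
    return (t_hot_k - t_cold_k) / t_cold_k


for label, t_cold in (("50 K stage", 50.0), ("4 K stage", 4.0), ("mixing chamber", 0.015)):
    print(f"{label:15s} {t_cold:>6} K: >= {min_work_per_joule(t_cold):,.0f} J of work per J removed")
```

At 15 millikelvin, every microwatt of heat reaching the coldest stage costs at least about 20 milliwatts at room temperature, before any real-world inefficiency.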

As quantum systems scale, energy costs could become prohibitive. A million-qubit quantum computer might require a dedicated power plant, raising questions about economic viability for all but the most valuable applications. This creates a paradox: quantum computers are most valuable for problems requiring large-scale quantum systems, but large-scale systems may be too expensive to operate except for applications with extraordinary economic returns.

Research into alternative qubit technologies aims to address this, but those approaches face their own challenges. Examples include photonic qubits, which avoid millikelvin refrigeration for the qubits themselves, and topological qubits, which aim to cut error correction overhead. The fundamental tension between quantum's need for isolation from environmental noise and the practical need for affordable, scalable systems remains unresolved.

Talent Shortage: Too Few Quantum Experts for Too Many Companies

Quantum computing requires expertise at the intersection of quantum physics, computer science, electrical engineering, and mathematics—a rare combination. Universities produce hundreds of quantum PhDs annually, but industry demand exceeds supply by orders of magnitude. Google, IBM, Microsoft, Amazon, and dozens of startups compete for the same small pool of talent, driving salaries to Silicon Valley highs while leaving many positions unfilled.

This talent bottleneck slows progress. Building quantum computers requires not just theoretical knowledge but practical engineering—designing superconducting circuits, optimizing control systems, developing error correction codes, and creating quantum algorithms. Each role demands years of specialized training, and companies can't simply "hire more people" to accelerate development because qualified people don't exist in sufficient numbers.

Educational initiatives are expanding quantum curricula, and companies like SpinQ offer educational quantum computers to train the next generation. But producing quantum-literate engineers takes 4-6 years (bachelor's and master's degrees), meaning today's talent shortage will persist until at least 2030. This constrains how quickly the industry can scale, even if hardware breakthroughs continue.

The Classical Computing Counterfactual: Moore's Law Isn't Dead Yet

A sobering consideration is that classical computing continues improving. While Moore's Law (doubling transistor density every two years) has slowed, classical systems are advancing through new architectures, specialized AI accelerators, and algorithmic improvements. Google's Willow demonstrated 13,000x speedup on a specific problem, but classical algorithms for similar problems continue improving too.

In some cases, classical computers have caught up to quantum claims. After Google announced quantum supremacy in 2019, IBM argued that an optimized simulation on a classical supercomputer could perform the same task in about 2.5 days rather than 10,000 years. Other groups later used tensor-network methods to reproduce comparable sampling tasks classically. This pattern repeats: quantum achieves a milestone, then classical systems find optimizations that narrow the gap. The question isn't whether quantum will someday exceed classical for certain problems; physics strongly suggests it will. The question is whether that advantage will materialize soon enough and be large enough to justify quantum's costs.

For many applications, "good enough" classical solutions may suffice. If a classical algorithm solves a portfolio optimization problem in one hour with 95% accuracy, and a quantum algorithm solves it in 10 minutes with 98% accuracy, the quantum advantage exists but may not justify the infrastructure investment. Quantum computing needs to demonstrate not just speedup but transformative capability: solving problems that are genuinely intractable for classical computers, not merely solving familiar problems faster.

Strategic Implications: How Organizations Should Prepare for the Quantum Era

For businesses, investors, and policymakers, quantum computing occupies an awkward zone between hype and reality. Ignoring it risks missing transformative technology; overcommitting risks wasting resources on immature systems. Strategic quantum preparation requires balancing these extremes.

For Enterprises: Explore, But Don't Overcommit

Organizations in pharmaceuticals, finance, logistics, and materials science should establish quantum pilot programs—small teams exploring potential use cases, experimenting with QaaS platforms, and building internal expertise. These efforts cost relatively little (low six figures annually) but position companies to capitalize when quantum systems mature.

However, enterprises should avoid betting core operations on quantum computing in the near term. Current systems can't replace classical infrastructure, and most business problems remain solvable with conventional computing. The goal is strategic optionality: understanding quantum well enough to act quickly when it becomes practical, without overinvesting in technology still years from commercial viability.

Partnerships with quantum computing providers offer a pragmatic path. IBM Quantum Network, Microsoft Azure Quantum, and Amazon Braket offer structured programs helping enterprises identify quantum-suitable problems and develop proof-of-concept applications. These partnerships provide access to cutting-edge hardware without requiring internal quantum infrastructure, reducing risk.

For Investors: Quantum Offers Asymmetric Risk-Reward

Quantum computing presents classic venture capital dynamics: high risk, high potential reward. Pure-play quantum companies like IonQ and Rigetti are speculative investments—they could become enormously valuable if quantum commercializes as hoped, or could fail if technical progress stalls or incumbents dominate.

Diversification is prudent. Rather than betting on a single quantum architecture, investors should spread bets across superconducting, trapped ion, topological, and other approaches, recognizing that no architecture has yet proven definitively superior. Exposure to quantum through diversified tech ETFs or large tech companies (Google, Microsoft, IBM, Amazon) offers lower risk by embedding quantum in broader portfolios balanced with profitable core businesses.

For investors with high risk tolerance, quantum computing's early stage offers opportunities reminiscent of early cloud computing or AI investments. But the failures will likely outnumber successes, and even successful companies may take a decade to generate returns. Patient capital with 10+ year horizons is suited to quantum; investors needing near-term liquidity should approach cautiously.

For Policymakers: Balancing Competition with Security

Quantum computing has become a focal point of geopolitical tech competition. China's massive quantum funding, Europe's Quantum Flagship initiative, and U.S. government investments frame quantum as a strategic technology whose dominance confers national advantages in cryptography, defense, and economic competitiveness.

Policymakers face difficult trade-offs. Export controls on quantum technology (like those on advanced AI chips) could prevent adversaries from gaining quantum capabilities but also slow progress by fragmenting the research community and limiting collaborations. Open publication of quantum research accelerates global progress but shares breakthroughs with competitors. Finding the right balance between openness and security is critical.

Investment in quantum education and research infrastructure is essential. Maryland's emergence as a quantum hub shows how targeted support (state investment in universities, business incentives, and federal partnerships such as those with DARPA) can create innovation clusters. Replicating this in more regions builds resilience against concentration risk while broadening the talent pipeline.

Finally, policymakers must prepare for quantum's disruptive impact on cybersecurity. The transition to post-quantum cryptography is urgent: even if cryptographically relevant quantum computers (CRQCs) are 10-15 years away, adversaries can "harvest now, decrypt later," capturing encrypted data today to decrypt once such machines become available. Mandating quantum-resistant encryption for critical infrastructure, financial systems, and government communications is a prudent precaution that doesn't require betting on quantum's timeline.
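The "harvest now, decrypt later" risk is often framed with Michele Mosca's inequality: if the number of years data must stay confidential (x) plus the years needed to migrate to quantum-resistant cryptography (y) exceeds the years until a CRQC exists (z), then data encrypted today is already exposed. The sketch below encodes that rule of thumb; the class and function names are hypothetical, and the input figures are placeholders for illustration, not forecasts.

```python
from dataclasses import dataclass


@dataclass
class QuantumRiskInputs:
    """Hypothetical planning inputs, all in years (illustrative, not forecasts)."""
    shelf_life: float      # x: how long the data must remain confidential
    migration_time: float  # y: how long a post-quantum migration will take
    years_to_crqc: float   # z: assumed time until a CRQC exists


def at_risk(inputs: QuantumRiskInputs) -> bool:
    """Mosca's inequality: harvested data is exposed when x + y > z."""
    return inputs.shelf_life + inputs.migration_time > inputs.years_to_crqc


def exposure_years(inputs: QuantumRiskInputs) -> float:
    """Years during which harvested data is decryptable but still sensitive (0 if safe)."""
    return max(0.0, inputs.shelf_life + inputs.migration_time - inputs.years_to_crqc)


if __name__ == "__main__":
    # Placeholder scenario: records that must stay secret for 10 years,
    # a 5-year migration, and a CRQC assumed 12 years away.
    records = QuantumRiskInputs(shelf_life=10, migration_time=5, years_to_crqc=12)
    print(at_risk(records), exposure_years(records))
```

With those placeholder numbers the check flags a risk: 10 + 5 exceeds 12, leaving roughly three years in which harvested data would still be sensitive after a CRQC arrives. The point of the inequality is that the answer depends on data lifetime and migration speed, not only on when quantum hardware matures.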

Conclusion: Willow's Place in Quantum's Long Arc

Google's Willow chip is undeniably a milestone: the first quantum processor to achieve verifiable quantum advantage on a scientifically meaningful problem while demonstrating below-threshold error correction. These achievements move quantum computing from theoretical possibility to engineering challenge, showing that the fundamental physics works and that a path to scalable systems exists.

Yet a milestone is not a destination. Quantum computing has been "five to ten years away" from commercial viability for decades, a running joke in the field that reflects the gap between laboratory breakthroughs and production systems. Willow narrows that gap but doesn't close it. Getting from 105 qubits to the millions needed for transformative applications will likely take at least a decade of sustained engineering progress, with each scaling step introducing new challenges.
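A quick back-of-the-envelope calculation puts the scaling gap in perspective: it counts how many doublings separate Willow's 105 qubits from a one-million-qubit machine, then converts that into years under a few assumed doubling cadences. The one-million target and the doubling periods are illustrative assumptions, not roadmaps from Google or any other vendor, and the helper names are hypothetical.

```python
import math


def doublings_needed(current_qubits: int, target_qubits: int) -> float:
    """How many times the qubit count must double to reach the target."""
    return math.log2(target_qubits / current_qubits)


def years_to_target(current_qubits: int, target_qubits: int,
                    doubling_period_years: float) -> float:
    """Years needed if qubit counts double every `doubling_period_years` (an assumption)."""
    return doublings_needed(current_qubits, target_qubits) * doubling_period_years


if __name__ == "__main__":
    willow_qubits = 105   # physical qubits on Willow
    target = 1_000_000    # illustrative stand-in for "the millions needed" (assumption)
    print(f"doublings needed: {doublings_needed(willow_qubits, target):.1f}")
    for period in (1.0, 1.5, 2.0):  # hypothetical doubling cadences, in years
        years = years_to_target(willow_qubits, target, period)
        print(f"doubling every {period} yr -> ~{years:.0f} years")
```

The arithmetic gives about 13 doublings, so even an optimistic yearly doubling implies more than a decade. Raw qubit count is also only one axis: error rates, control wiring, and cryogenics must scale alongside it.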

The market dynamics surrounding quantum—$2 billion in venture capital, $20 billion projections, volatile stock prices—exhibit characteristics of both rational long-term investment and speculative bubble. Quantum computing will almost certainly transform certain industries, but the timeline, scale, and distribution of benefits remain highly uncertain. Investors and enterprises betting heavily on quantum must accept substantial risk that technical or commercial hurdles could delay returns beyond their patience.

For society, quantum computing represents both opportunity and challenge. The same capabilities that could revolutionize drug discovery and materials science could also break the encryption protecting financial systems and communications. The geopolitical quantum race could accelerate progress through competition or create fragmentation that slows innovation. And the resource intensity of quantum computing (specialized talent, power-hungry cryogenic cooling, complex infrastructure) raises questions about equitable access and environmental sustainability.

Willow's breakthrough demonstrates that quantum computing is real, progressing, and worthy of serious attention. But between "working in the lab" and "transforming the world" lies a vast expanse of engineering, investment, patience, and luck. The quantum revolution has begun, but it will unfold over decades, not years: a marathon in which only an important early mile has been run.

The question isn't whether quantum computing will arrive, but whether stakeholders—from researchers to investors to policymakers—can sustain the commitment needed to carry it from breakthrough to impact. Willow provides reason for optimism that the journey is possible. Whether it proves worthwhile depends on what happens next.

References and Sources

This analysis draws on the following sources and research:

- "Google Quantum Echoes with Willow: First Verifiable Quantum Advantage" - Google AI Blog (https://blog.google/technology/research/quantum-echoes-willow-verifiable-quantum-advantage/)

- "Google's Quantum Breakthrough is Truly Remarkable—But There's More to Do" - ZDNet Analysis (December 2024)

- "Top Quantum Computing Companies in 2025" - The Quantum Insider (https://thequantuminsider.com/2025/09/23/top-quantum-computing-companies/)

- "Quantum Computing Industry Trends 2025: Breakthrough Milestones & Commercial Transition" - SpinQuanta (https://www.spinquanta.com/news-detail/quantum-computing-industry-trends-2025-breakthrough-milestones-commercial-transition)

- "Infleqtion to Showcase Quantum Computing Innovation at NVIDIA GTC" - Business Wire (October 22, 2025)

- "Is It Time to Sell Your Quantum Computing Stocks?" - The Motley Fool (October 19, 2025)

- "Maryland Poised to Benefit as Quantum Computing Market Booms" - CityBiz Analysis (2025)

- "TIME Best Inventions 2025: Industry-Wide Quantum Chip Advancements" - TIME Magazine

- IBM Quantum Roadmap and Technical Reports (2025)

- Microsoft Azure Quantum Documentation and Majorana Qubit Research (2025)

- Amazon Web Services Braket Quantum Computing Platform Documentation

- IonQ Investor Relations and Technical Presentations (2025)

- Nature Journal - Google Quantum AI Research Publications (2024-2025)

- NIST Quantum Computing and Post-Quantum Cryptography Standards

- Forrester Research - "Quantum Computing Market Forecasts 2025-2030"

- Markets and Markets - "Quantum Computing Market Report" (2025 projections)

- McKinsey Digital - "The Top Trends in Tech: Quantum Computing" (2025)

- Stanford University - "The AI Index Report" (quantum computing section)

- MIT Technology Review - "Quantum Computing: When Will It Be Useful?"

- Various quantum computing company announcements and technical disclosures from Rigetti Computing, D-Wave Systems, PsiQuantum, Atom Computing, and others

- U.S. Department of Energy - Quantum Information Science Research Programs

- European Quantum Flagship Initiative Documentation

- China Academy of Sciences - Quantum Computing Research Reports

- Academic papers on quantum error correction, published in Physical Review Letters, Science, and Nature Physics (2024-2025)

Disclaimer: This analysis is for informational purposes only and does not constitute investment, technical, or strategic advice. Quantum computing is an emerging field with significant uncertainties, and markets and competitive dynamics can change rapidly in the technology sector. Taggart is not a licensed financial advisor and does not claim to provide professional financial guidance. Readers and organizations should conduct their own research and independent due diligence, and consult quantum computing experts and qualified financial professionals, before making strategic or investment decisions based on this information.

Taggart Buie

Writer, Analyst, and Researcher